VMware Data Services Manager enables you to manage disaster recovery operations for Provider VMs, Agent VMs, and database VMs through VMware Site Recovery Manager (SRM). With disaster recovery in place, you can maintain business continuity from a Recovery site even if the Primary site goes down because of a power outage or another disaster.

If the Primary site goes down because of a power outage, network issues, storage issues, or similar problems, the Recovery site provides business continuity until the Primary site is back up. After the Primary site is up again, normal operations resume, with all functionality running from the Primary site.

Prerequisites

SRM uses the vSphere Replication appliance to provide disaster recovery for the VMs in VMware Data Services Manager. The following prerequisites apply to vSphere Replication and to the smooth functioning of disaster recovery:

- An SRM appliance and a vSphere Replication appliance configured at each site.

- A network to which both the SRM and vSphere Replication appliances are connected. The appliances should connect to the vCenter Server of their site using either a static IP address or an address assigned through DHCP.

- A low-latency network connection between the Primary site and the Recovery site, to minimize replication lag from the Primary site to the Recovery site.

- Datastores configured in the Recovery site.

- Network configurations for the VMware Data Services Manager setup must be similar on both the Primary and Recovery sites. Use the same subnets on both sites for the networks used in the VMware Data Services Manager setup. For example, if you use 192.168.100.0/24 for the VMware Data Services Manager Management network on the Primary site, use 192.168.100.0/24 for the VMware Data Services Manager Management network on the Recovery site as well. For more information about network configurations in VMware Data Services Manager, see Network Configuration Requirements.
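The same-subnet requirement can be checked before you configure SRM. The following is a minimal Python sketch, assuming you have listed by hand the subnet of each VMware Data Services Manager network on each site. The function name subnet_mismatches and the network names are hypothetical and are not part of any VMware API.

```python
import ipaddress
from typing import Dict, List


def subnet_mismatches(primary: Dict[str, str], recovery: Dict[str, str]) -> List[str]:
    """Hypothetical pre-flight check: compare the subnet of each
    VMware Data Services Manager network on the Primary and Recovery sites.

    Both arguments map a network name to its subnet in CIDR notation.
    Returns a human-readable list of problems; an empty list means the
    subnets match.
    """
    problems = []
    for name in sorted(primary.keys() | recovery.keys()):
        if name not in primary or name not in recovery:
            problems.append(f"{name}: defined on only one site")
            continue
        # ip_network parses both prefix and netmask notation, so equal subnets compare equal.
        p = ipaddress.ip_network(primary[name])
        r = ipaddress.ip_network(recovery[name])
        if p != r:
            problems.append(f"{name}: Primary uses {p}, Recovery uses {r}")
    return problems


primary_site = {"Management": "192.168.100.0/24"}
recovery_site = {"Management": "192.168.100.0/24"}
print(subnet_mismatches(primary_site, recovery_site))  # []
```

Returning a list of problems instead of a single boolean makes it easy to report every mismatched network in one pass.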

Achieving Disaster Recovery Through SRM

After you have configured VMware Data Services Manager and created the required databases in the Primary site, follow these major steps to configure SRM for disaster recovery. For more details, see the SRM documentation.

Step 1: Install the SRM and vSphere Replication appliances in the Primary site and the Recovery site, and then do the following:

- Create Replication VMs in the Recovery site.

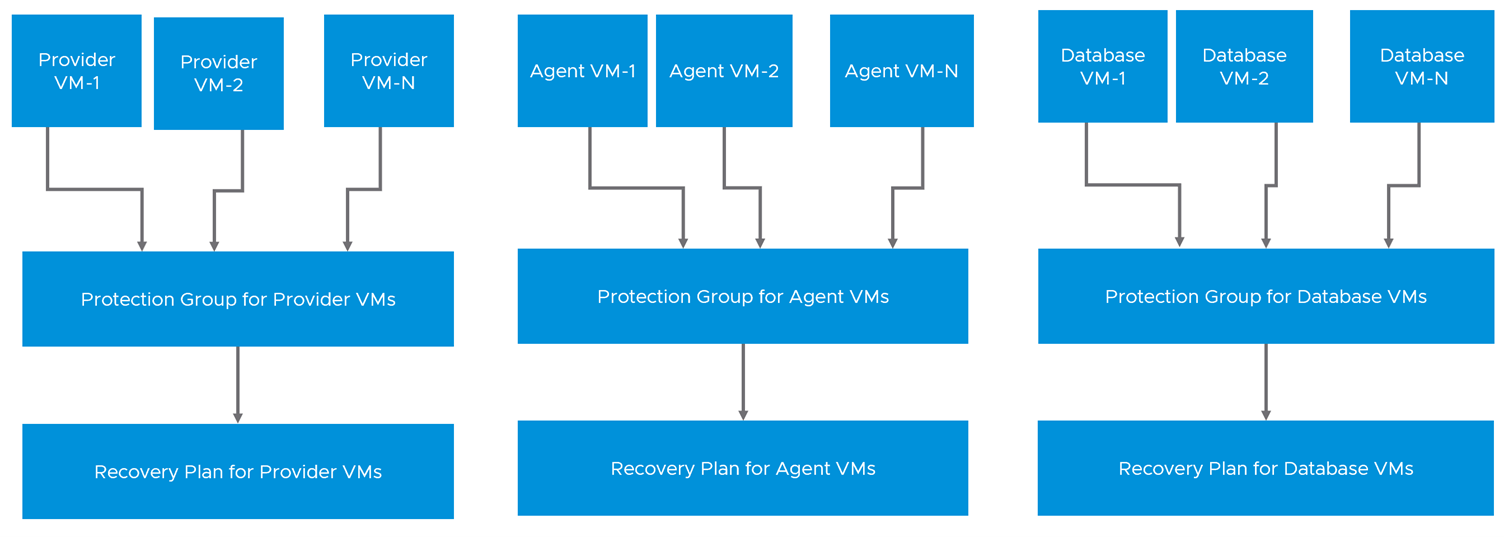

- Create Protection Groups, for example, one for Provider VMs, one for Agent VMs, and one for database VMs.

- Create Recovery Plans mapped to the Protection Groups, for example, one each for the Provider VMs, Agent VMs, and database VMs, each mapped to the corresponding Protection Group.

Step 2: Configure the prerequisites described in the Prerequisites section.

Step 3: (Optional) Test the disaster recovery setup from the Primary site to the Recovery site to verify that it works as expected, and fix any errors.

Step 4: If the Primary site goes down, run the Recovery Plans to maintain business continuity from the Recovery site. Run the Provider recovery first, followed by the Agent recovery, and finally the database recovery.

Step 5: (Recommended) After the Primary site is up again, perform recovery from the Recovery site back to the Primary site. Recover the Provider VMs first, followed by the Agent VMs, and finally the database VMs. Resume performing all VMware Data Services Manager operations from the Primary site, and be prepared to run the Recovery Plans again if the Primary site goes down.
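The ordering rule in Steps 4 and 5 (Provider, then Agent, then database) is the same for failover and failback, and a later tier should not start if an earlier one failed. A minimal Python sketch of that rule follows. It assumes a hypothetical run_plan callable that starts the SRM Recovery Plan for a tier and reports success. It does not call any real SRM API.

```python
from typing import Callable, List, Sequence

# Order given in Steps 4 and 5: Provider VMs, then Agent VMs, then database VMs.
RECOVERY_ORDER = ("Provider", "Agent", "Database")


def run_recovery_plans(run_plan: Callable[[str], bool],
                       order: Sequence[str] = RECOVERY_ORDER) -> List[str]:
    """Run one Recovery Plan per tier in the required order.

    run_plan is a hypothetical callable that starts the SRM Recovery Plan for
    a tier and returns True once it has completed successfully. Stops at the
    first failure so later tiers never run before earlier ones have recovered.
    Returns the tiers that were recovered.
    """
    recovered: List[str] = []
    for tier in order:
        if not run_plan(tier):
            break
        recovered.append(tier)
    return recovered


# Usage with a stand-in for SRM: the Agent plan fails, so the database plan never runs.
attempted = []


def fake_run(tier: str) -> bool:
    attempted.append(tier)
    return tier != "Agent"


print(run_recovery_plans(fake_run))  # ['Provider']
```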

Additional Steps For MySQL Database Clusters

After running the Recovery Plans to recover from the Primary site to the Recovery site, you must perform a few additional steps for MySQL database clusters. Perform the same steps again when the Primary site is back up and you recover from the Recovery site to the Primary site.

For example, suppose a MySQL database cluster has three nodes, and the following new Application network IP addresses are assigned to the cluster and its nodes:

| Network | IP Address |
|---|---|
| Cluster (virtual IP) | 192.168.20.100/24 |
| Primary Node Application Network | 192.168.20.101/24 |
| Secondary Node 1 Application Network | 192.168.20.102/24 |
| Secondary Node 2 Application Network | 192.168.20.103/24 |
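On each node, the changed part of keepalived.conf differs only in which address is the local source and which addresses are peers, so it can be derived from the address table. The following is a minimal Python sketch, using the example addresses above. The helper keepalived_change_block is hypothetical and is not part of VMware Data Services Manager or keepalived. It renders only the lines between # change start and # change end, which you would place inside vrrp_instance VRRP1.

```python
import ipaddress
from typing import Dict


def keepalived_change_block(node_ips: Dict[str, str], current: str, cluster_ip: str) -> str:
    """Hypothetical helper: render the '# change start' .. '# change end' lines
    of vrrp_instance VRRP1 for the node named `current`.

    node_ips maps node names to Application network addresses in CIDR form
    (as in the example table); the unicast settings take bare IP addresses.
    """
    def bare(cidr: str) -> str:
        # Drop the prefix length, e.g. "192.168.20.101/24" -> "192.168.20.101".
        return str(ipaddress.ip_interface(cidr).ip)

    # Every other node is a peer; dict order keeps the table order.
    peers = [bare(ip) for name, ip in node_ips.items() if name != current]
    lines = ["# change start", f"unicast_src_ip {bare(node_ips[current])}", "unicast_peer {"]
    lines += [f"    {peer}" for peer in peers]
    # The virtual/cluster IP keeps its prefix length, as in the samples.
    lines += ["}", "virtual_ipaddress {", f"    {cluster_ip}", "}", "# change end"]
    return "\n".join(lines)


nodes = {
    "Primary Node": "192.168.20.101/24",
    "Secondary Node 1": "192.168.20.102/24",
    "Secondary Node 2": "192.168.20.103/24",
}
for node in nodes:
    print(f"## {node}")
    print(keepalived_change_block(nodes, node, "192.168.20.100/24"))
```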

Modify the /etc/keepalived/keepalived.conf file on each node of the MySQL cluster as follows.

Sample keepalived.conf file for the Primary node:

```
# keep rest of the content here as-is
vrrp_instance VRRP1 {
    # keep rest of the content here as-is
    # change start
    unicast_src_ip 192.168.20.101    # Application Network IP address of the current node
    unicast_peer {
        192.168.20.102    # Application Network IP address of Secondary Node 1
        192.168.20.103    # Application Network IP address of Secondary Node 2
    }
    virtual_ipaddress {
        192.168.20.100/24    # Virtual/Cluster IP
    }
    # change end
    # keep rest of the content here as-is
}
```

Sample keepalived.conf file for Secondary Node 1:

```
# keep rest of the content here as-is
vrrp_instance VRRP1 {
    # keep rest of the content here as-is
    # change start
    unicast_src_ip 192.168.20.102    # Application Network IP address of the current node
    unicast_peer {
        192.168.20.101    # Application Network IP address of the Primary Node
        192.168.20.103    # Application Network IP address of Secondary Node 2
    }
    virtual_ipaddress {
        192.168.20.100/24    # Virtual/Cluster IP
    }
    # change end
    # keep rest of the content here as-is
}
```

Sample keepalived.conf file for Secondary Node 2:

```
# keep rest of the content here as-is
vrrp_instance VRRP1 {
    # keep rest of the content here as-is
    # change start
    unicast_src_ip 192.168.20.103    # Application Network IP address of the current node
    unicast_peer {
        192.168.20.101    # Application Network IP address of the Primary Node
        192.168.20.102    # Application Network IP address of Secondary Node 1
    }
    virtual_ipaddress {
        192.168.20.100/24    # Virtual/Cluster IP
    }
    # change end
    # keep rest of the content here as-is
}
```

Restart keepalived.service on all nodes of the MySQL database cluster, for example by running systemctl restart keepalived.service on each node.

Run the following MySQL Shell commands to bring the cluster back from a complete outage:

| Command | Purpose |
|---|---|
| `jq . /var/dbaas/configure/input.json` | Gets the root user password for any database VM in the given cluster. |
| `mysqlsh root@<cluster-ip> -- dba reboot-cluster-from-complete-outage Cluster1` | Reboots the cluster. Run this command from a VM that can resolve the FQDNs of all members of the MySQL database cluster. |
| `mysqlsh root@<cluster-ip> -- cluster status` | Validates the status of the cluster. |

After running the `mysqlsh root@<cluster-ip> -- cluster status` command, validate that the roles of the cluster members are correctly assigned and that the status of the cluster is ONLINE.
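To make the role and status check repeatable, you can parse the status document instead of reading it by eye. The following is a minimal Python sketch. It assumes that you have saved the JSON status document returned by the cluster status command, that the document follows MySQL Shell's usual layout (defaultReplicaSet.topology maps each member to an object with memberRole and status fields), and that the cluster runs in single-primary mode. The function name and the member addresses are hypothetical.

```python
import json
from typing import List


def cluster_status_problems(status_json: str) -> List[str]:
    """Return problems found in a MySQL InnoDB Cluster status document.

    Assumes the layout printed by MySQL Shell's cluster status, where
    defaultReplicaSet.topology maps each member address to an object with
    "memberRole" and "status" fields, and a single-primary cluster.
    An empty list means every member is ONLINE and exactly one is PRIMARY.
    """
    topology = json.loads(status_json)["defaultReplicaSet"]["topology"]
    problems = []
    primaries = [addr for addr, m in topology.items() if m.get("memberRole") == "PRIMARY"]
    if len(primaries) != 1:
        problems.append(f"expected exactly one PRIMARY member, found {len(primaries)}")
    for addr, member in topology.items():
        if member.get("status") != "ONLINE":
            problems.append(f"{addr} is {member.get('status')}, not ONLINE")
    return problems


# Hypothetical sample: one secondary has not rejoined the cluster.
sample = json.dumps({"defaultReplicaSet": {"topology": {
    "node1:3306": {"memberRole": "PRIMARY", "status": "ONLINE"},
    "node2:3306": {"memberRole": "SECONDARY", "status": "ONLINE"},
    "node3:3306": {"memberRole": "SECONDARY", "status": "(MISSING)"},
}}})
print(cluster_status_problems(sample))
```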

Tasks Allowed On the Recovery Site

While the Primary site is down, you can perform the following tasks on the Recovery site to maintain business continuity:

- Databases continue to work, because they use the same FQDNs on the Primary site and the Recovery site.

- Database backups work.

- Monitoring activities work.

- Operations that involve only the Provider VMs, for example:

  - Creating or updating an organization

  - Creating or updating users

  - Creating or updating instance plans

  - Publishing or unpublishing templates

  - Creating or updating storage settings

- Database recovery

- Manual and automated promotion of database replicas

Tasks Not Allowed On the Recovery Site

You cannot perform the following tasks on the Recovery site:

- Restoring databases or performing point-in-time recovery (PITR) from backups, and cloning databases

- Creating new database VMs

- Creating database replicas

- Any operation that requires connectivity to the Primary site, for example, updating environment details, syncing VC resources, changing compute resources, extending disk capacity, onboarding a Provider environment, onboarding an Agent, or recovering an Agent