This section focuses on the architecture of the virtual infrastructure layer elements, including the hypervisor (ESXi) and the software-defined networking and storage elements.

The components used to build the VVS reference architecture are as follows:

vSphere hypervisor

vSphere virtual networking

vSphere datastores

ESXi Hypervisor

A type 1, real-time hypervisor is required for critical workloads such as utility system protection applications. Beginning with ESXi 7.0 Update 2, VMware vSphere meets this requirement through I/O latency and jitter optimizations that provide consistent runtime. Long-term testing has shown that ESXi, configured with VM Latency Sensitivity set to High and using PCI passthrough for data, performs nearly identically to bare metal. These results hold even when non-real-time workloads run alongside the critical VMs.

Tuning and performance testing of reference architectures are detailed in Virtual Machine Configuration for Performance.
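As a rough illustration of the Latency Sensitivity setting mentioned above, the following sketch parses the text of a VM configuration (.vmx) file and checks whether the setting is High. It assumes the setting is stored under the advanced-option key `sched.cpu.latencySensitivity`; the sample file contents and the VM name are hypothetical, and the function names are introduced here for illustration only.

```python
def parse_vmx(text):
    """Parse 'key = "value"' lines from .vmx-style text into a dict."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and anything that is not key = value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip().strip('"')
    return settings


def latency_sensitivity_is_high(settings):
    # Assumption: the setting lives under this key and defaults to "normal".
    value = settings.get("sched.cpu.latencySensitivity", "normal")
    return value.lower() == "high"


# Hypothetical file contents for a real-time VM.
SAMPLE_VMX = '''
displayName = "vPR-01"
sched.cpu.latencySensitivity = "high"
'''

if __name__ == "__main__":
    print(latency_sensitivity_is_high(parse_vmx(SAMPLE_VMX)))
```

A check like this only inspects one setting; the full tuning procedure is the one described in Virtual Machine Configuration for Performance.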

Virtual Networking

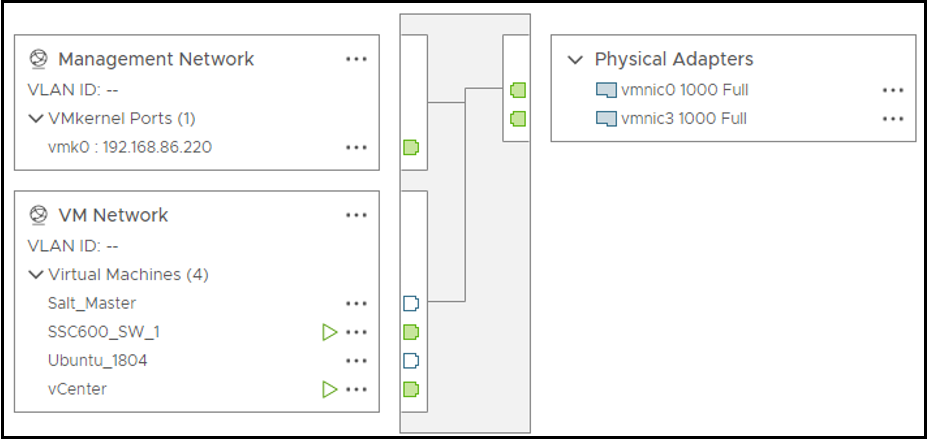

As indicated in the VVS reference architecture (see VVS Reference Architecture), the virtual networking required for a vPAC application has two parts. The process bus connection is provided by a dedicated PCI-passthrough NIC, which is associated with the VM at the time of its installation (see Configuring vSAN). It provides consistent, low-latency networking performance for critical traffic such as IEC 61850 GOOSE, SV, and PTP.

The vPAC application also requires a station bus connection, which can be made through a vSphere Standard Switch (VSS). A VSS is configured separately on each host, through either the ESXi or the vCenter GUI. Details on capabilities and settings can be found under vSphere Networking on docs.vmware.com. These include VLAN segmentation, 802.1Q tagging, and teaming or failover.

VMware recommends vSphere Distributed Switches (VDSs) when clustering servers. VDS provides centralized management and monitoring for all ESXi hosts connected. Beyond the features mentioned for the VSS, a VDS also offers inbound or outbound traffic shaping, VM port blocking, private VLANs, load-based teaming, vMotion, port mirroring, virtual switch APIs, and Link Aggregation Control Protocol (LACP) support.

Within vSphere, LACP can be used to configure Link Aggregation Groups (LAGs) made up of multiple ports. This offers increased bandwidth and redundancy for the physical ports that are included. The LAG can also be used within vCenter settings for teaming and failover, with built-in step-by-step migration guidance.

The station bus connection to the vPAC application carries general management traffic (native graphical user interface or command line interface, CLI), data protocols such as IEC 61850 MMS or Distributed Network Protocol (DNP3), system timing, and VMware administration. For a listing of the services and ports used by VMware products, go to ports.esp.vmware.com.

The VMkernel networking layer provides connectivity from VMs to a host for core processes. Additionally, it enables services such as vMotion, IP Storage, Fault Tolerance (FT), vSAN, and other management and administrative functions. VMware recommends dedicating a separate VMkernel adapter along with a unique VLAN for each traffic type.
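The recommendation to give each traffic type its own VMkernel adapter and VLAN can be checked mechanically. The sketch below is a minimal example under stated assumptions: the adapter names (`vmk0`, `vmk1`, `vmk2`) and VLAN IDs are hypothetical placeholders, not values taken from the reference architecture.

```python
from collections import Counter

# Hypothetical plan: one VMkernel adapter and one VLAN per traffic type.
VMK_PLAN = [
    {"traffic": "Management", "adapter": "vmk0", "vlan": 10},
    {"traffic": "vMotion", "adapter": "vmk1", "vlan": 20},
    {"traffic": "vSAN", "adapter": "vmk2", "vlan": 30},
]


def find_shared(plan, field):
    """Return the values of `field` used by more than one traffic type."""
    counts = Counter(entry[field] for entry in plan)
    return sorted(value for value, n in counts.items() if n > 1)


def plan_is_segregated(plan):
    """True when no adapter and no VLAN is shared between traffic types."""
    return not find_shared(plan, "adapter") and not find_shared(plan, "vlan")


if __name__ == "__main__":
    print(plan_is_segregated(VMK_PLAN))
```

Reporting the shared values, rather than only a pass or fail result, makes it easier to see which adapter or VLAN needs to be split.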

For clustering hosts, VMware recommends using distributed switches with individual port groups for each VMkernel adapter. Examples of segregated traffic within the vPAC reference architecture include Management, vMotion, and vSAN communications, as shown in the following table.

| Traffic Type | Comments | Network Requirements |
|---|---|---|
| Management | vCenter to ESXi, infrastructure VMs access, IPMI hardware interface, vSAN witness (when used). | Less than or equal to 500 ms round-trip time; greater than or equal to 2 Mbps bandwidth for every 1000 vSAN components. |
| vSAN | vSAN services | Less than or equal to 1 ms round-trip time; greater than or equal to 10 Gbps bandwidth (all-flash configurations). Recommend MTU of 9000 to support jumbo frames. |
| vMotion | vMotion services | Less than or equal to 150 ms round-trip time; greater than or equal to 250 Mbps bandwidth for each concurrent session. Recommend MTU of 9000 to support jumbo frames. |

Note: VMware recommends using LACP/LAG or NIC teaming/failover mechanisms for added bandwidth and redundancy.
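The thresholds in the table above lend themselves to a simple sizing check. The following sketch transcribes the round-trip time and bandwidth limits and tests a measured link against them. The function names are illustrative, and rounding vSAN component counts up to whole blocks of 1000 is an assumption, since the table does not say how partial blocks are treated.

```python
import math

# Thresholds transcribed from the table above. The "unit" is: 1000 vSAN
# components (Management), the link itself (vSAN, all-flash), or one
# concurrent session (vMotion).
REQUIREMENTS = {
    "Management": {"max_rtt_ms": 500, "min_mbps_per_unit": 2},
    "vSAN": {"max_rtt_ms": 1, "min_mbps_per_unit": 10_000},
    "vMotion": {"max_rtt_ms": 150, "min_mbps_per_unit": 250},
}


def management_units(vsan_components):
    """Blocks of 1000 vSAN components, rounded up (rounding is an assumption)."""
    return max(1, math.ceil(vsan_components / 1000))


def required_mbps(traffic, units=1):
    """Minimum bandwidth in Mbps for the given number of units."""
    return REQUIREMENTS[traffic]["min_mbps_per_unit"] * units


def link_is_adequate(traffic, rtt_ms, bandwidth_mbps, units=1):
    """True when both the latency and the bandwidth thresholds are met."""
    limits = REQUIREMENTS[traffic]
    return (rtt_ms <= limits["max_rtt_ms"]
            and bandwidth_mbps >= required_mbps(traffic, units))
```

For example, four concurrent vMotion sessions need at least 4 × 250 = 1000 Mbps, so `link_is_adequate("vMotion", rtt_ms=20, bandwidth_mbps=1000, units=4)` passes, while a vSAN link with a 2 ms round-trip time fails regardless of bandwidth.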

Further details on virtual networking configuration design decisions are explained in the following table.

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| VPAC-VNET-001 | Passing critical process bus traffic directly to VMs, bypassing virtual switches. | Maximum network performance is achieved today by providing VMs with direct access to NICs facilitating critical traffic. | Each VM consuming process bus traffic must be assigned a physical NIC port (or PRP aggregated DAN port). |
| VPAC-VNET-002 | Virtual networking redundancy (separate physical NICs) to include teaming and failover configuration. | The highest levels of availability are achieved when the virtual network is designed without any single points of failure. | Additional resources must be planned for the physical compute and extra loading required for layered redundancy. |
| VPAC-VNET-003 | Virtual switch type, distributed versus standard. | Distributed switches offer additional features and can be centrally managed with vCenter. | vCenter must be available to set up and maintain distributed switches. |
| VPAC-VNET-004 | Network security and isolation. | Station bus traffic benefits from VLAN segmentation. vSAN and vMotion traffic benefits from physical isolation and dedicated high bandwidth (vMotion traffic is not encrypted). | Additional design and configuration effort is required, making the system more complex. |

Do not set connection limits and timeouts between products (ESXi, vCSA), which can affect the packet flow and cause interruption of services.

Isolate networks (such as server LOM, ESXi, vMotion, vSAN) to improve security and performance.

Dedicate physical NICs to VM groups or use network I/O control and traffic shaping to guarantee bandwidth. This separation also enables distributing a portion of the total networking workload across multiple CPUs. Isolated VMs have higher performance in application traffic.

Create a vSphere Standard or Distributed virtual switch for each type of service. If this is not possible, separate network services on a single switch by attaching them to port groups with different VLAN IDs.

Keep the vSphere vMotion connection on a separate network, either by using VLANs to segment a single physical network or, preferably, by using separate physical networks.

For migration across IP subnets and for using separate pools of buffer and sockets, place the traffic for vMotion on the vMotion TCP/IP stack, and the traffic for migration of powered-off virtual machines and cloning on the provisioning TCP/IP stack.

Network adapters can be added or removed from standard or distributed vSwitches without affecting the VMs or the network service that is running behind the switch. If all running hardware is removed, the VMs can still communicate with each other. If one network adapter is left intact, all the virtual machines can still connect with the physical network.

To protect the most sensitive VMs, deploy firewalls wherever traffic is routed between virtual networks that have uplinks to physical networks and purely virtual networks that have no uplinks.

Physical network adapters connected to the same vSphere Standard or Distributed switch must also be connected to the same physical network.

Configure the same MTU setting for all VMkernel network adapters in a vSphere Distributed Switch, otherwise network connectivity problems can occur.

Additional best practices apply to networks that incorporate HA or FT. For more information, go to docs.vmware.com and search for vSphere availability best practices for networking.
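The MTU recommendation above (the same MTU on all VMkernel adapters of a vSphere Distributed Switch) can be sketched as a small consistency check. The adapter names and MTU values are hypothetical inputs; how they are collected from the hosts is outside the scope of this example.

```python
def mismatched_mtus(adapters):
    """Group VMkernel adapter names by MTU.

    `adapters` maps adapter name to configured MTU. Returns an empty dict
    when every adapter uses the same MTU, otherwise a dict of MTU to the
    adapter names configured with it.
    """
    by_mtu = {}
    for name, mtu in adapters.items():
        by_mtu.setdefault(mtu, []).append(name)
    return by_mtu if len(by_mtu) > 1 else {}


if __name__ == "__main__":
    # Hypothetical switch where one adapter was left at a 1500-byte MTU.
    print(mismatched_mtus({"vmk0": 1500, "vmk1": 9000, "vmk2": 9000}))
```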

Software-Defined Storage

A datastore is a logical storage unit that uses disk space on one or more physical devices. Datastores are logical containers that provide a uniform model for storing virtual machine files. vSphere offers several different datastore types, as shown in the following table.

| Datastore Type | Storage Protocol | Boot from SAN Support | vSphere vMotion Support | vSphere HA Support | vSphere DRS Support |
|---|---|---|---|---|---|
| NFS | NFS | No | Yes | Yes | Yes |
| Virtual Volumes | FC/Ethernet (iSCSI, NFS) | No | Yes | Yes | Yes |
| VMFS | Fibre Channel | Yes | Yes | Yes | Yes |
| VMFS | FCoE | Yes | Yes | Yes | Yes |
| VMFS | iSCSI | Yes | Yes | Yes | Yes |
| VMFS | iSER/NVMe-oF (RDMA) | No | Yes | Yes | Yes |
| VMFS | DAS (SAS, SATA, NVMe) | N/A | Yes | No | No |
| vSAN | vSAN | No | Yes | Yes | Yes |

NFS and virtual volumes are out of scope for this guide. For more information, see vSphere documentation.
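To make the datastore table easier to query when comparing options, its rows can be encoded as data. The sketch below is illustrative only: the `DatastoreOption` type and the `select` helper are names introduced here, and "N/A" in the Boot from SAN column is encoded as `None`.

```python
from typing import NamedTuple, Optional


class DatastoreOption(NamedTuple):
    datastore: str
    protocol: str
    boot_from_san: Optional[bool]  # None encodes "N/A"
    vmotion: bool
    ha: bool
    drs: bool


# Rows transcribed from the datastore table above.
OPTIONS = [
    DatastoreOption("NFS", "NFS", False, True, True, True),
    DatastoreOption("Virtual Volumes", "FC/Ethernet (iSCSI, NFS)", False, True, True, True),
    DatastoreOption("VMFS", "Fibre Channel", True, True, True, True),
    DatastoreOption("VMFS", "FCoE", True, True, True, True),
    DatastoreOption("VMFS", "iSCSI", True, True, True, True),
    DatastoreOption("VMFS", "iSER/NVMe-oF (RDMA)", False, True, True, True),
    DatastoreOption("VMFS", "DAS (SAS, SATA, NVMe)", None, True, False, False),
    DatastoreOption("vSAN", "vSAN", False, True, True, True),
]


def select(options, **required):
    """Return the options whose named fields equal the required values."""
    return [
        option for option in options
        if all(getattr(option, field) == value for field, value in required.items())
    ]
```

For instance, `select(OPTIONS, datastore="VMFS", boot_from_san=True)` returns the Fibre Channel, FCoE, and iSCSI rows, and `select(OPTIONS, ha=False)` returns only the direct-attached storage row.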

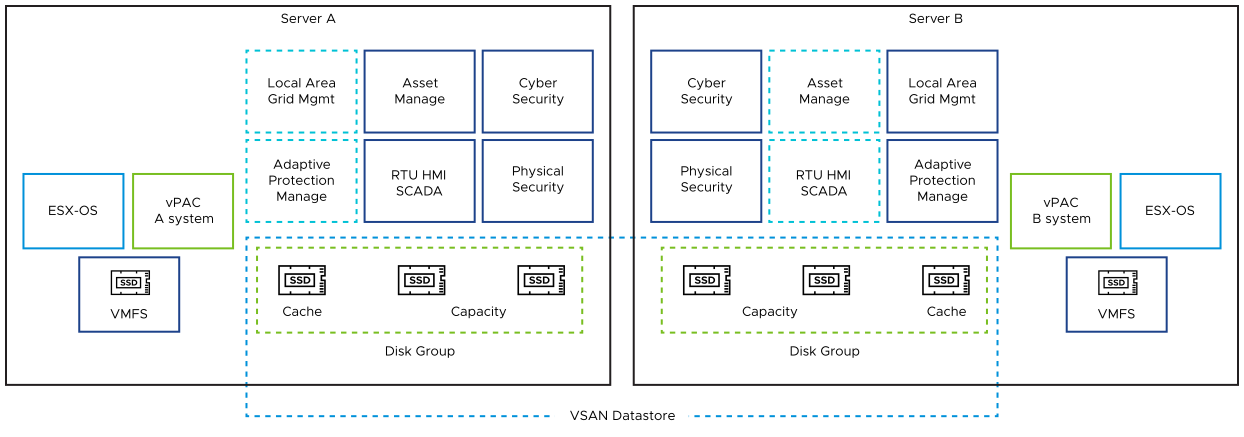

VMFS is an automated file system simplifying high-performance storage management for VMs. Multiple VMs can be stored in the same VMFS datastore, encapsulated within a set of files, but with locking mechanisms to allow safe operation within a networked storage environment (for example, multiple ESXi hosts sharing a datastore). The VVS reference architecture makes use of VMFS datastores for the installation of real-time workloads (vPR).

VMware vSAN (ESXi-native, virtual networked storage) pools the local disks of individual servers to form a shared datastore. This shared datastore provides software RAID options to establish elevated levels of failure tolerance. Additionally, vSAN can provide data deduplication and compression, and data encryption at rest and in transit.

The VVS reference architecture uses vSAN for all non-real-time workloads (vAC). Development is under way so that real-time workloads can also be hosted on vSAN datastores in the future. For more information on vSAN requirements, see ECS Overview.

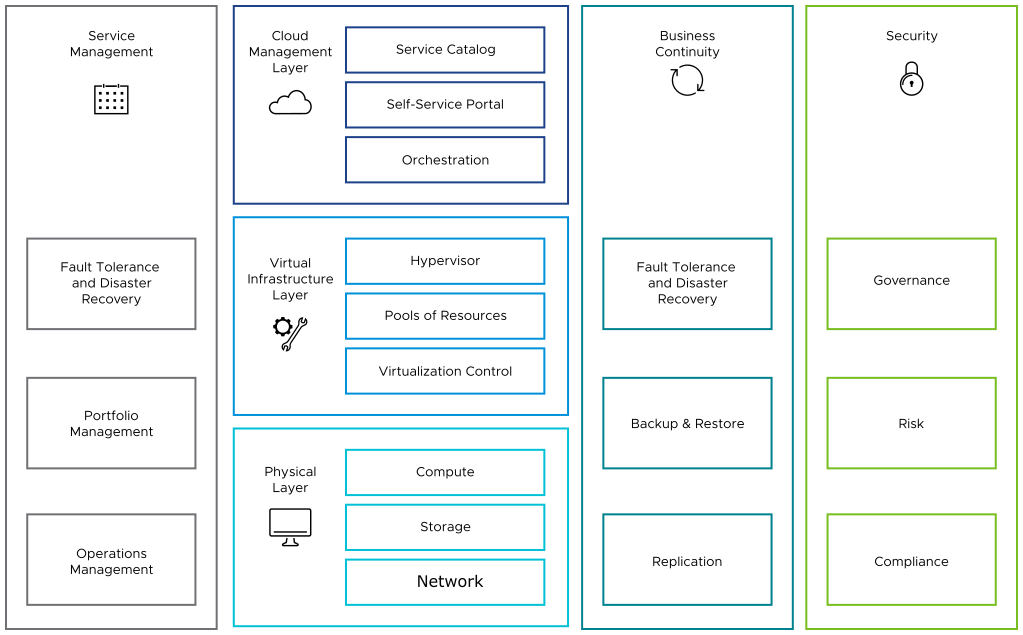

Additional Components of Enterprise Architecture

The physical and virtual infrastructure layers introduced so far are the components found at the edge. The remaining supporting elements of a complete enterprise architecture are remote management and service administration, business continuity, and the overall security measures implemented.

These components are briefly introduced in this section, and the appropriate VMware links are provided for more information.