- Version 3.9

The Tanzu Greenplum Text high availability feature ensures that you can continue working with Tanzu Greenplum Text indexes as long as each shard in the index has at least one working replica.

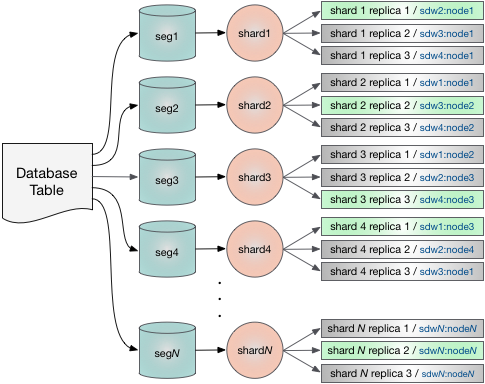

A Tanzu Greenplum Text index has one shard for each Greenplum segment, so there is a one-to-one correspondence between Greenplum segments and Tanzu Greenplum Text index shards. The shard managed by a Greenplum segment is an index of the documents that are managed by that segment.

The Tanzu Greenplum Text high availability mechanism is to maintain multiple copies, or replicas, of the shard. The ZooKeeper service that manages SolrCloud chooses a Tanzu Greenplum Text instance (SolrCloud node) for each replica to ensure even distribution and high availability. For each shard, one replica is elected leader and the Greenplum segment associated with the shard operates on this leader replica. The Tanzu Greenplum Text instance managing the lead replica may or may not be on another Greenplum host, so indexing and searching operations are passed over the Greenplum cluster's interconnect network. SolrCloud replicates changes made to the leader replica to the remaining replicas.

The following figure illustrates the relationships between Greenplum segments and Tanzu Greenplum Text index shards and replicas. The leader replica for each shard is shown in green and the followers are gray.

The number of replicas created for each shard, called the replication factor, is a SolrCloud property. By default, Tanzu Greenplum Text starts SolrCloud with a replication factor of two. Each index uses the SolrCloud replication factor in effect at the time the index is created; changing the replication factor later does not alter the replication factor of existing indexes.
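To make the shard, replica, and leader relationships concrete, here is a minimal Python sketch. It assumes a simple round-robin placement that never puts two replicas of the same shard on the same node, and it treats the first replica in each list as the leader. This is a hypothetical stand-in, not the placement or election logic that ZooKeeper and SolrCloud actually use; the node names `sdw1` to `sdw4` and the function `place_replicas` are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Shard:
    """One index shard; Tanzu Greenplum Text creates one per Greenplum segment."""

    name: str
    # Node names holding a replica of this shard, leader first (hypothetical convention).
    replicas: list[str] = field(default_factory=list)

    @property
    def leader(self) -> str:
        return self.replicas[0]


def place_replicas(num_segments: int, nodes: list[str],
                   replication_factor: int = 2) -> list[Shard]:
    """Assign replication_factor replicas to each shard, round-robin over nodes.

    Assumption: a simplified placement rule. Offsets seg, seg+1, ... are distinct
    modulo len(nodes) as long as replication_factor <= len(nodes), so no node
    ever holds two replicas of the same shard.
    """
    if replication_factor > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    shards = []
    for seg in range(num_segments):
        chosen = [nodes[(seg + k) % len(nodes)] for k in range(replication_factor)]
        shards.append(Shard(name=f"shard{seg + 1}", replicas=chosen))
    return shards


if __name__ == "__main__":
    for shard in place_replicas(4, ["sdw1", "sdw2", "sdw3", "sdw4"]):
        print(shard.name, "leader:", shard.leader, "replicas:", shard.replicas)
```

Spreading each shard's replicas across different nodes is what lets a shard survive the loss of any single node when the replication factor is two or more.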

Greenplum Segment or Host Failure

If a Greenplum primary segment fails and its mirror is activated, Tanzu Greenplum Text functions and utilities continue to access the leader replica. No intervention is needed.

If a host in the cluster fails, both Greenplum and Tanzu Greenplum Text are affected. Mirrors for the Greenplum primary segments located on the failed host are activated on other hosts, and SolrCloud elects a new leader replica for each affected shard. Because Greenplum segment mirrors and Tanzu Greenplum Text shard replicas are distributed throughout the cluster, a single host failure should not prevent the cluster from continuing to operate. However, the performance of database queries and indexing operations may be degraded until the failed host is recovered and the cluster is brought back into balance.
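The effect of a host failure on shard availability can be sketched in a few lines of Python. The sketch assumes a placement given as a mapping from shard name to replica node names (current leader first) and a simplified rule in which the first surviving replica becomes the new leader; SolrCloud's real leader election is more involved. The function name `fail_hosts` and the host names are hypothetical.

```python
from __future__ import annotations


def fail_hosts(placement: dict[str, list[str]],
               failed: set[str]) -> tuple[dict[str, str], list[str]]:
    """Return (new leader per shard, shards with no surviving replica).

    placement maps a shard name to its replica node names, current leader first.
    Assumption: the first surviving replica in list order becomes the new leader.
    """
    leaders: dict[str, str] = {}
    unavailable: list[str] = []
    for shard, replicas in placement.items():
        survivors = [node for node in replicas if node not in failed]
        if survivors:
            leaders[shard] = survivors[0]
        else:
            # No live replica: the index cannot serve this shard ("Red").
            unavailable.append(shard)
    return leaders, unavailable


if __name__ == "__main__":
    # Hypothetical four-host layout with a replication factor of two.
    placement = {
        "shard1": ["sdw1", "sdw2"],
        "shard2": ["sdw2", "sdw3"],
        "shard3": ["sdw3", "sdw4"],
        "shard4": ["sdw4", "sdw1"],
    }
    leaders, unavailable = fail_hosts(placement, {"sdw2"})
    print(leaders)
    print(unavailable)  # empty: every shard still has a live replica
```

Losing `sdw2` alone leaves every shard with a replica, but losing two hosts that share a shard's only replicas makes that shard unavailable.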

ZooKeeper Cluster Availability

SolrCloud is dependent on a working, available ZooKeeper cluster. For ZooKeeper to be active, a majority of the ZooKeeper cluster nodes must be up and able to communicate with each other. A ZooKeeper cluster with three nodes can continue to operate if one of the nodes fails, since two is a majority of three. To tolerate two failed nodes, the cluster must have at least five nodes so that the number of working nodes remaining after the failures is a majority. In general, to tolerate n node failures, a ZooKeeper cluster must have at least 2n+1 nodes. This is why ZooKeeper clusters usually have an odd number of nodes: adding a node to an odd-sized cluster does not increase the number of failures it can tolerate.

The best practice for a high-availability Tanzu Greenplum Text cluster is a ZooKeeper cluster with five or seven nodes so that the cluster can tolerate two or three failed nodes.
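The majority rule above reduces to simple arithmetic. This Python sketch computes the ensemble size needed for a given number of tolerated failures and, conversely, how many failures an ensemble of a given size survives; the function names are illustrative.

```python
def nodes_required(failures_tolerated: int) -> int:
    """Smallest ZooKeeper cluster that keeps a majority after n failures: 2n + 1."""
    return 2 * failures_tolerated + 1


def failures_tolerated(cluster_size: int) -> int:
    """Failures a cluster of N nodes survives while a strict majority remains."""
    # Survivors N - f must exceed N / 2, which gives f <= (N - 1) // 2.
    return (cluster_size - 1) // 2


def has_quorum(cluster_size: int, nodes_up: int) -> bool:
    """True if the live nodes form a strict majority of the cluster."""
    return 2 * nodes_up > cluster_size


if __name__ == "__main__":
    for size in (3, 4, 5, 6, 7):
        print(f"{size} nodes tolerate {failures_tolerated(size)} failure(s)")
```

Running the loop shows that four nodes tolerate no more failures than three, and six no more than five, which is why the recommended sizes are five or seven.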

Managing Tanzu Greenplum Text Cluster Health

Tanzu Greenplum Text document indexing and searching services remain available as long as each shard of an index has at least one working replica. To ensure availability in the event of a failure, it is important to monitor the status of the cluster and ensure that all of the index shard replicas are healthy. You can monitor the SolrCloud cluster and indexes using the SolrCloud Dashboard or using Tanzu Greenplum Text functions and management utilities. Access the SolrCloud Dashboard by pointing a web browser at any Tanzu Greenplum Text instance, using a URL such as http://sdw3:18983/solr. (The port numbers for Tanzu Greenplum Text instances are set at installation time with the GPTEXT_PORT_BASE parameter in the installation parameters file.)

Refer to the Apache SolrCloud documentation for help using the SolrCloud Dashboard.

Monitoring the Cluster with Tanzu Greenplum Text

The Tanzu Greenplum Text gptext-state management utility allows you to query the state of the Tanzu Greenplum Text cluster and indexes. You can also use gptext.index_status() to view the status of all indexes or a specified index.

To see the Tanzu Greenplum Text cluster state, run the gptext-state command-line utility with the -d option to specify a database that has the Tanzu Greenplum Text schema installed.

gptext-state -d mydb

The utility reports any Tanzu Greenplum Text nodes that are down and lists the status of every Tanzu Greenplum Text index. For each index, the database name, index name, and status are reported. The status column contains "Green", "Yellow", or "Red":

- Green – all replicas for all shards are healthy

- Yellow – all shards have at least one healthy replica but at least one replica is down

- Red – no replicas are available for at least one index shard
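The three status levels can be expressed as a small classification rule. The following Python sketch restates the Green/Yellow/Red definitions above; the input shape (one boolean per replica, keyed by shard name) and the function name `classify_index` are assumptions for illustration, not how gptext-state computes the status internally.

```python
from __future__ import annotations


def classify_index(shard_replicas: dict[str, list[bool]]) -> str:
    """Classify an index from the health of each shard's replicas.

    shard_replicas maps a shard name to one boolean per replica (True = healthy).
    """
    if any(not any(replicas) for replicas in shard_replicas.values()):
        return "Red"     # at least one shard has no healthy replica
    if all(all(replicas) for replicas in shard_replicas.values()):
        return "Green"   # every replica of every shard is healthy
    return "Yellow"      # every shard is served, but at least one replica is down


if __name__ == "__main__":
    print(classify_index({"shard1": [True, True], "shard2": [True, False]}))
```

Note that "Yellow" still means the index is fully usable; it signals lost redundancy that should be repaired before another failure turns it "Red".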

To see the distribution of index shards and replicas in the Tanzu Greenplum Text cluster, execute this SQL statement.

SELECT index_name, shard_name, replica_name, node_name FROM gptext.index_summary() ORDER BY node_name;
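Once you have the rows returned by this query (for example, exported from psql), a short Python sketch can summarize them. It counts replicas per node and flags any shard whose replicas share a node, since a single node failure would take out both. The row tuple order matches the columns selected above; the function name `summarize` and the sample values are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def summarize(rows: list[tuple[str, str, str, str]]) -> tuple[Counter, list[tuple[str, str]]]:
    """Summarize (index_name, shard_name, replica_name, node_name) rows.

    Returns the number of replicas on each node, and the (index, shard) pairs
    that have two or more replicas on the same node.
    """
    per_node = Counter(node_name for _, _, _, node_name in rows)
    nodes_by_shard: dict[tuple[str, str], list[str]] = defaultdict(list)
    for index_name, shard_name, _, node_name in rows:
        nodes_by_shard[(index_name, shard_name)].append(node_name)
    colocated = sorted(
        key for key, nodes in nodes_by_shard.items() if len(nodes) != len(set(nodes))
    )
    return per_node, colocated


if __name__ == "__main__":
    sample = [
        ("demo.twitter.message", "shard1", "core_node1", "sdw1"),
        ("demo.twitter.message", "shard1", "core_node2", "sdw2"),
        ("demo.twitter.message", "shard2", "core_node3", "sdw2"),
        ("demo.twitter.message", "shard2", "core_node4", "sdw2"),
    ]
    per_node, colocated = summarize(sample)
    print(per_node)
    print(colocated)
```

An uneven per-node count points to a cluster that is out of balance, for example after a failed host has been recovered.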

To list all Tanzu Greenplum Text indexes, run the gptext-state list command.

gptext-state list -d mydb

The gptext-state healthcheck command checks the health of the cluster. The -f flag specifies the percentage of available disk space required to report a healthy cluster. The default is 10.

gptext-state healthcheck -f 20 -d mydb
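To illustrate what the -f percentage means, here is a minimal Python sketch that applies the same kind of threshold to one filesystem using `shutil.disk_usage`. It is an assumption-laden illustration only: gptext-state healthcheck checks its own set of locations and may measure space differently, and the function name `disk_ok` and the path `/` are placeholders.

```python
import shutil


def disk_ok(path: str, min_free_percent: float = 10.0) -> bool:
    """Return True if the filesystem holding path has at least min_free_percent available.

    Mirrors the meaning of the healthcheck -f value (default 10), not its implementation.
    """
    usage = shutil.disk_usage(path)
    free_percent = 100.0 * usage.free / usage.total
    return free_percent >= min_free_percent


if __name__ == "__main__":
    # "/" is a placeholder; point this at a filesystem used by a Tanzu Greenplum Text instance.
    print(disk_ok("/", min_free_percent=20))
```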

See gptext-state in the Management Utilities reference for help with additional gptext-state options.

The gptext.index_status() user-defined function reports the status of all Tanzu Greenplum Text indexes or a specified index.

SELECT * FROM gptext.index_status();

Specify an index name to report only the status of that index.

SELECT * FROM gptext.index_status('demo.twitter.message');

Adding and Dropping Replicas

The gptext-replica utility adds or drops a replica of a single index shard. Use the gptext.add_replica() and gptext.delete_replica() user-defined functions to perform the same tasks from within the database.

If a replica of a shard fails, use gptext-replica to add a new replica and then drop the failed replica to bring the index back to "Green" status.

gptext-replica add -i mydb.public.messages -s shard3

Here is the equivalent, using the gptext.add_replica() function:

SELECT * FROM gptext.add_replica('mydb.public.messages', 'shard3');

ZooKeeper determines where the replica will be located, but you can also specify the node where the replica is created with the -n option:

gptext-replica add -i mydb.public.messages -s shard3 -n sdw3

In the gptext.add_replica() function, add the node name as a third argument.

To drop a replica, use gptext-replica drop or the gptext.delete_replica() function, specifying the name of the index, the name of the shard, and the name of the replica. You can find the name of the replica by calling gptext.index_status(index_name). Replica names have the format core_nodeN, where N is a number, for example core_node4. The optional -o flag specifies that the replica is to be deleted only if it is down.

gptext-replica drop -i mydb.public.messages -s shard3 -r core_node4 -o

Here is the equivalent of the above command using the gptext.delete_replica() user-defined function. The fourth argument, true, corresponds to the -o flag.

SELECT * FROM gptext.delete_replica('mydb.public.messages', 'shard3', 'core_node4', true);
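The add-then-drop procedure for replacing a failed replica can be scripted. This Python sketch only builds the two gptext-replica command lines, using exclusively the options shown in this section (-i, -s, -n, -r, -o); the function name `replace_replica_commands` is hypothetical, and the sketch prints the commands rather than running them.

```python
from __future__ import annotations

import shlex


def replace_replica_commands(index: str, shard: str, failed_replica: str,
                             node: str | None = None) -> list[list[str]]:
    """Build the commands to add a new replica, then drop the failed one."""
    add = ["gptext-replica", "add", "-i", index, "-s", shard]
    if node is not None:
        add += ["-n", node]  # optional: choose the node for the new replica
    # -o: drop the replica only if it is down, so a healthy replica is never removed.
    drop = ["gptext-replica", "drop", "-i", index, "-s", shard, "-r", failed_replica, "-o"]
    return [add, drop]


if __name__ == "__main__":
    for argv in replace_replica_commands("mydb.public.messages", "shard3", "core_node4"):
        print(shlex.join(argv))
        # To execute for real: subprocess.run(argv, check=True)
```

Adding the new replica before dropping the failed one keeps the shard's replica count from dropping further while the repair is in progress.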