To scale the microservices in your application, you need to deploy Tanzu Service Mesh Service Autoscaler by applying a Definition custom resource to each microservice to be scaled.

The Definition custom resource was created specifically for Tanzu Service Mesh Service Autoscaler to implement and customize the autoscaler. Enabling the autoscaler is easy if you are familiar with Kubernetes and the kubectl client. You can see the actions of the autoscaler (if enabled) from both the command line, using kubectl commands, and the Tanzu Service Mesh Console user interface, where graphs of service instance counts and metrics are displayed.
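For the command-line view mentioned above, something like the following could be used to inspect a Definition object. This is a minimal sketch: the helper name `describe_autoscaler` is hypothetical, `frontend-asd` is the example object name used later in this topic, and the fields shown in the output depend on the installed version.

```shell
# Hypothetical helper: prints the details of one Definition custom object.
# Arguments: $1 = name of the Definition object, $2 = cluster namespace.
describe_autoscaler() {
  kubectl describe definition "$1" -n "$2"
}
```

For example, `describe_autoscaler frontend-asd <namespace>` describes the object named frontend-asd in the given namespace.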

Autoscaling with the Definition custom resource is only available for services directly in cluster namespaces. The Definition custom resource is not available for services inside a global namespace.

You do not need to install the custom resource definition on your cluster. During onboarding, Tanzu Service Mesh Lifecycle Manager Operator automatically installs this custom resource definition on the cluster.
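If you want to confirm that the custom resource definition is present after onboarding, a check along the following lines may help. This is a minimal sketch: the helper name `definition_crd_installed` is hypothetical, and it assumes that the resource kind appears as `Definition` in the output of `kubectl api-resources`.

```shell
# Hypothetical helper: succeeds if the cluster lists a resource of kind "Definition".
# Assumption: the kind appears as "Definition" in the KIND column of the output.
definition_crd_installed() {
  # -w matches "Definition" only as a whole word, so the built-in
  # "CustomResourceDefinition" kind is not counted as a match.
  kubectl api-resources | grep -w "Definition"
}
```

If the helper prints nothing and returns a nonzero exit status, the custom resource definition is probably not installed on the cluster.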

The use of the autoscaler is based on the assumption that resources are available for it to continue to scale out instances. If the cluster becomes resource-constrained, the cluster administrator must handle underlying cluster expansion manually or automatically through additional automation.

If you create a GNS-scoped autoscaling policy through the UI and an org-scoped autoscaling policy through the CRD for the same target service, the GNS-scoped policy overrides the org-scoped policy.

Prerequisites

Verify that your clusters use Tanzu Service Mesh Data Plane version 4.3.0 or later.

Procedure

What to do next

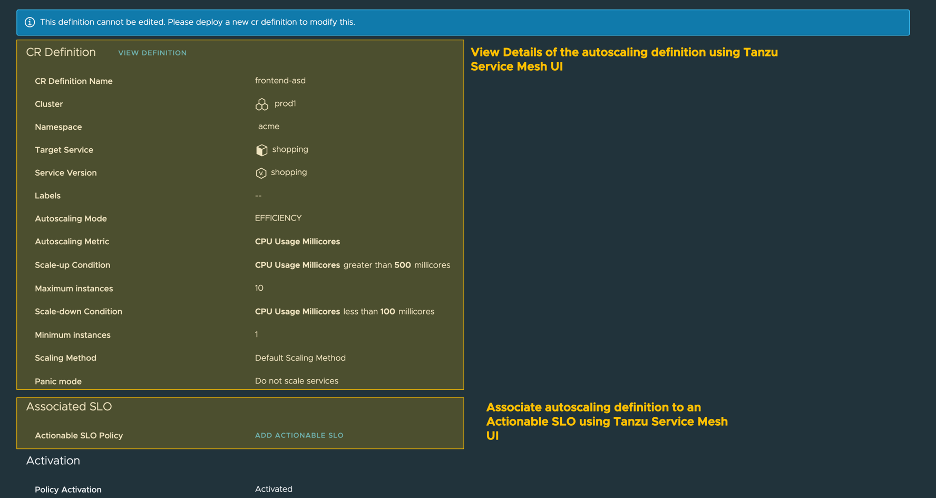

You can now view the Definition custom resource through the Tanzu Service Mesh Console UI. To see the autoscaling policy created using the Kubernetes CRD, perform these steps.

In the navigation panel on the left, click Autoscaling Policies.

To view the policy details, expand the autoscaling definition.

From the Tanzu Service Mesh Console UI, you can view the autoscaling definition, but you cannot modify it.

To see autoscaling at work (if it has been enabled) in the Tanzu Service Mesh Console user interface, perform these steps.

In the navigation panel on the left, click Home.

On the Home page, click the Cluster Overview tab.

Click the cluster that has a service being autoscaled.

In the service topology graph, click a microservice.

Click the Performance tab.

In the upper-right corner of the tab, click Chart Settings to show service instances and other metrics of interest. You can select a maximum of four metrics to be displayed.

To access the autoscaling endpoints in the Tanzu Service Mesh API through the API Explorer, perform these steps.

At the bottom of the Tanzu Service Mesh Console UI, toward the right, click API Explorer.

On the Rest HTTP tab, expand the Autoscaling section and click the GET /v1alpha1/autoscaling/configs endpoint.

This endpoint gets a list of all autoscaling policies and their details.
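The same endpoint could also be called outside the API Explorer. The following is only a sketch under stated assumptions: the helper name `list_autoscaling_configs` and the variables `TSM_BASE_URL` and `TSM_TOKEN` are hypothetical, and the `csp-auth-token` header name is an assumption that you should check against the request shown in the API Explorer for your environment.

```shell
# Hypothetical helper: requests the list of autoscaling policies from the API.
# Assumptions (not taken from this topic):
#   TSM_BASE_URL - base URL of the Tanzu Service Mesh API for your environment
#   TSM_TOKEN    - a valid access token
#   The token is sent in a "csp-auth-token" header.
list_autoscaling_configs() {
  curl -sS \
    -H "csp-auth-token: ${TSM_TOKEN}" \
    "${TSM_BASE_URL}/v1alpha1/autoscaling/configs"
}
```

Set both variables before calling the helper. The response format is whatever the endpoint returns in the API Explorer.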

If you no longer need autoscaling, delete the Definition custom resource.

kubectl delete definition frontend-asd -n <namespace>

In the command above, frontend-asd is the name of the Definition custom object to delete.
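To check that the object is gone, you could list the Definition objects that remain in the namespace. This is a minimal sketch, and the helper name `list_definitions` is hypothetical. It uses the same `definition` resource name as the delete command.

```shell
# Hypothetical helper: lists the Definition custom objects in a namespace.
# Argument: $1 = cluster namespace to inspect.
list_definitions() {
  kubectl get definition -n "$1"
}
```

After a successful delete, the deleted object (frontend-asd in the example) should no longer appear in the list.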

For more examples and explanations, see the sample apps on GitHub. They include detailed instructions for deploying the autoscaler to a sample app on a cluster, so you can try the commands above on Tanzu Service Mesh: