By leveraging the enhanced tenant capabilities of VMware Integrated OpenStack, vCloud NFV OpenStack Edition facilitates combining the edge and resource functionality into a single, collapsed pod. Collapsing the two makes a smaller-footprint design possible. CSPs can use a two-pod design to gain operational experience with vCloud NFV OpenStack Edition. As demand grows, they can scale up and scale out within the two-pod construct.

The initial two-pod deployment consists of one cluster for the Management pod and another cluster for the collapsed Edge / Resource pod. Clusters are vSphere objects for pooling virtual domain resources and managing resource allocation. Clusters scale up as needed by adding ESXi hosts, while pods scale up by adding new clusters to the existing pods. This design ensures that management boundaries are clearly defined, capacity is managed, and resources are allocated based on the functionality hosted by the pod. vCloud NFV OpenStack Edition VIM components allow for fine-grained allocation and partitioning of resources to the workloads, regardless of the scaling method used.

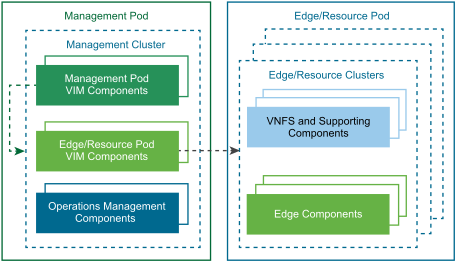

The diagram shows a typical two-pod design with all management functions centrally located within the Management pod. Edge and resource functions are combined into the collapsed Edge / Resource pod. During initial deployment, two clusters of ESXi hosts are used: one for the Management pod, and the other for the collapsed Edge / Resource pod. Additional clusters can be added to each pod as the infrastructure is scaled up.

As a best practice, begin the initial deployment with a minimum of four hosts per cluster within each pod, for a total of eight hosts. With an initial four-host cluster deployment, a high degree of resiliency is enabled by using vSAN storage. At the same time, four hosts allow clustered management components, such as the vCenter Server active node, standby node, and witness node, to be placed on separate hosts in the Management pod, creating a highly available Management pod design. The same design principle is used for clustered OpenStack components such as its database nodes.
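
The two rules above (at least four hosts per cluster, and clustered management nodes on separate hosts) can be checked against an inventory before deployment. The following is a minimal sketch, not a VMware tool: the host names, the `clusters` and `vcenter_ha` dictionaries, and both function names are hypothetical and exist only for illustration.

```python
from collections import Counter

# Minimum hosts per cluster recommended in the text above.
MIN_HOSTS_PER_CLUSTER = 4


def undersized_clusters(clusters):
    """Return the names of clusters with fewer hosts than the minimum.

    clusters maps a cluster name to the list of its ESXi host names.
    """
    return sorted(
        name for name, hosts in clusters.items()
        if len(hosts) < MIN_HOSTS_PER_CLUSTER
    )


def colocated_hosts(placement):
    """Return hosts that run more than one node of a clustered component.

    placement maps a node role (for example "active") to a host name.
    """
    counts = Counter(placement.values())
    return sorted(host for host, n in counts.items() if n > 1)


# Hypothetical inventory for an initial two-pod deployment (eight hosts).
clusters = {
    "management": [f"mgmt-esxi-0{i}" for i in range(1, 5)],
    "edge-resource": [f"er-esxi-0{i}" for i in range(1, 5)],
}

# Hypothetical placement of the three vCenter Server nodes named in the text.
vcenter_ha = {
    "active": "mgmt-esxi-01",
    "standby": "mgmt-esxi-02",
    "witness": "mgmt-esxi-03",
}

print(undersized_clusters(clusters))  # empty list: both clusters have four hosts
print(colocated_hosts(vcenter_ha))    # empty list: each node is on its own host
```

The same `colocated_hosts` check could be applied to any other clustered component, such as the OpenStack database nodes, by passing a different placement mapping.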

The initial number and sizing of management components in the Management pod are pre-planned. As a result, the capacity requirement of the Management pod is expected to remain steady. Storage capacity planning for the Management pod must include operational headroom for VNF files, snapshots, backups, virtual machine templates, operating system images, and log files.

The sizing of the collapsed Edge / Resource cluster changes based on the VNF and networking requirements. When planning the capacity of the Edge / Resource pod, tenants must work with the VNF vendors to gather requirements for the VNF service to be deployed. Such information is typically available from the VNF vendors in the form of deployment guides and sizing guidelines. These guidelines are directly related to the scale of the VNF service, for example, the number of subscribers to be supported. In addition, the capacity utilization of ESGs must be taken into consideration, especially when more ESG instances are deployed to scale up as the number of VNFs increases.
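
As a rough illustration of how vendor sizing guidelines and ESG capacity feed into a host count, the sketch below sums per-instance vCPU and memory requirements and divides by usable host capacity. Every number in it is a placeholder rather than a vendor figure, the `Workload` type and `hosts_required` function are hypothetical, and the model assumes one vCPU per physical core with no oversubscription.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    instances: int
    vcpus: int       # vCPUs per instance, from the vendor sizing guide
    memory_gb: int   # memory per instance, from the vendor sizing guide


def hosts_required(workloads, host_cores, host_memory_gb, headroom):
    """Estimate the hosts needed for the given workloads.

    headroom is the fraction of each host kept free (0.25 means 25%).
    Assumes one vCPU per physical core, which is a simplification.
    """
    total_vcpus = sum(w.instances * w.vcpus for w in workloads)
    total_memory = sum(w.instances * w.memory_gb for w in workloads)
    usable_cores = host_cores * (1 - headroom)
    usable_memory = host_memory_gb * (1 - headroom)
    return max(
        math.ceil(total_vcpus / usable_cores),
        math.ceil(total_memory / usable_memory),
    )


# Placeholder requirements: VNF instances plus the ESGs that serve them.
workloads = [
    Workload("vnf-a", instances=6, vcpus=8, memory_gb=32),
    Workload("esg", instances=4, vcpus=4, memory_gb=8),
]

estimate = hosts_required(workloads, host_cores=32, host_memory_gb=256, headroom=0.25)
print(estimate)  # 3 with these illustrative numbers
```

An estimate below four would still be rounded up in practice, since the recommended minimum is four hosts per cluster.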

When scaling up the Edge / Resource pod by adding hosts to the cluster, newly added resources are automatically pooled, which adds capacity to the compute cluster. New tenants can be provisioned to consume resources from the total available pooled capacity. Allocation settings for existing tenants must be modified before they can benefit from increased resource availability.
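
The distinction between pooled capacity and per-tenant allocation can be made concrete with a small model. This is a conceptual sketch only: `ComputePool` is a hypothetical class, not part of vSphere or VMware Integrated OpenStack, the vCPU figures are placeholders, and the model deliberately ignores oversubscription.

```python
class ComputePool:
    """Toy model of a compute cluster whose capacity is pooled across hosts."""

    def __init__(self):
        self.capacity_vcpus = 0
        self.quotas = {}  # tenant name -> allocated vCPUs

    def add_host(self, vcpus):
        # New host capacity joins the pool automatically.
        self.capacity_vcpus += vcpus

    def unallocated(self):
        return self.capacity_vcpus - sum(self.quotas.values())

    def set_quota(self, tenant, vcpus):
        # Changing an allocation is an explicit step for each tenant.
        others = sum(q for t, q in self.quotas.items() if t != tenant)
        if others + vcpus > self.capacity_vcpus:
            raise ValueError("allocation exceeds pooled capacity")
        self.quotas[tenant] = vcpus


pool = ComputePool()
for _ in range(4):
    pool.add_host(32)            # initial four-host cluster
pool.set_quota("tenant-a", 100)

pool.add_host(32)                # scale up by adding a fifth host
quota_after_scale_up = pool.quotas["tenant-a"]  # unchanged by the scale-up
free_after_scale_up = pool.unallocated()        # the new capacity is unallocated

pool.set_quota("tenant-b", 40)   # a new tenant consumes from the pool
pool.set_quota("tenant-a", 120)  # the existing tenant benefits only after this change
print(quota_after_scale_up, free_after_scale_up, pool.unallocated())
```

The existing tenant's allocation stays at its original value after the host is added, and it grows only when the quota is explicitly modified.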

OpenStack compute nodes are added to scale out the Resource pod. Additional compute nodes can be added on the OpenStack Management Server using the vSphere Web Client extension. Due to the leaf-and-spine network design, additional ESGs continue to be deployed in the initial Edge cluster.

Within the Management pod, a set of components is deployed to manage the pod itself. These components include an instance of vCenter Server Appliance, Platform Services Controllers (PSCs), an instance of NSX Manager, and the VMware Integrated OpenStack management components.

A 1:1 relationship is required between NSX Manager and vCenter Server. Ancillary components necessary for the healthy operation of the platform, such as Domain Name System (DNS), are also deployed in the Management pod. The tenant-facing Virtualized Infrastructure Manager (VIM) component, VMware Integrated OpenStack, is located in the Management pod, and is connected to the vCenter Server and its associated NSX Manager for networking. Also within the Management pod, a separate instance of vCenter Server is deployed to manage the Edge / Resource pod, which uses its own PSCs. Likewise, a separate NSX Manager is deployed to maintain the 1:1 relationship to the vCenter Server.
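
The 1:1 pairing rule can be expressed as a simple consistency check over a planned deployment. The sketch below is an illustration under stated assumptions: the instance names and the `one_to_one_violations` function are hypothetical, and the check only detects names that appear in more than one pairing, not a vCenter Server that has no NSX Manager at all.

```python
from collections import Counter


def one_to_one_violations(pairings):
    """Return names that appear in more than one (nsx_manager, vcenter) pairing."""
    nsx_counts = Counter(nsx for nsx, _ in pairings)
    vc_counts = Counter(vc for _, vc in pairings)
    return sorted(
        name
        for counts in (nsx_counts, vc_counts)
        for name, n in counts.items()
        if n > 1
    )


# Hypothetical two-pod plan: one pairing manages the Management pod,
# the other manages the collapsed Edge / Resource pod.
planned = [
    ("nsx-mgmt", "vcenter-mgmt"),
    ("nsx-edge-resource", "vcenter-edge-resource"),
]

print(one_to_one_violations(planned))  # empty list: each pairing is exclusive
```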

The Edge / Resource pod hosts all edge functions, VNFs, and VNFMs. The edge functions in the pod are NSX ESGs used to route traffic between different tenants and to provide North-South connectivity. Tenants can also deploy either edge routers or distributed routers for East-West connectivity. Edge VMs deployed as part of a distributed logical router are used for firewall and dynamic routing.

Since both edge functions and VNFs may be associated with a given tenant, resource utilization of the collapsed Edge / Resource pod must be carefully monitored. For example, an increase in the number of tenants, and in the VNFs they subsequently deploy, inevitably expands the number of edge resources used. Operations Management discusses the approach to resource capacity management for this case. When resources are limited, scale-up operations for the collapsed Edge / Resource pod must be carefully coordinated.

The VMware Integrated OpenStack layer of abstraction, and the ability to partition resources in vSphere, facilitate an important aspect of a shared NFV environment: secure multitenancy. Secure multitenancy ensures that more than one consumer of the shared NFV platform can coexist on the same physical infrastructure, without an awareness of, ability to influence, or ability to harm one another. With secure multitenancy, resources are oversubscribed, yet fairly shared and guaranteed as necessary. This is the bedrock of the NFV business case. Implementation of secure multitenancy is described in Secure Multitenancy.