You deploy one or more TKG Service clusters in a vSphere Namespace. Configuration settings applied to the vSphere Namespace are inherited by each TKG Service cluster deployed there.

Configure Role Permissions for the vSphere Namespace

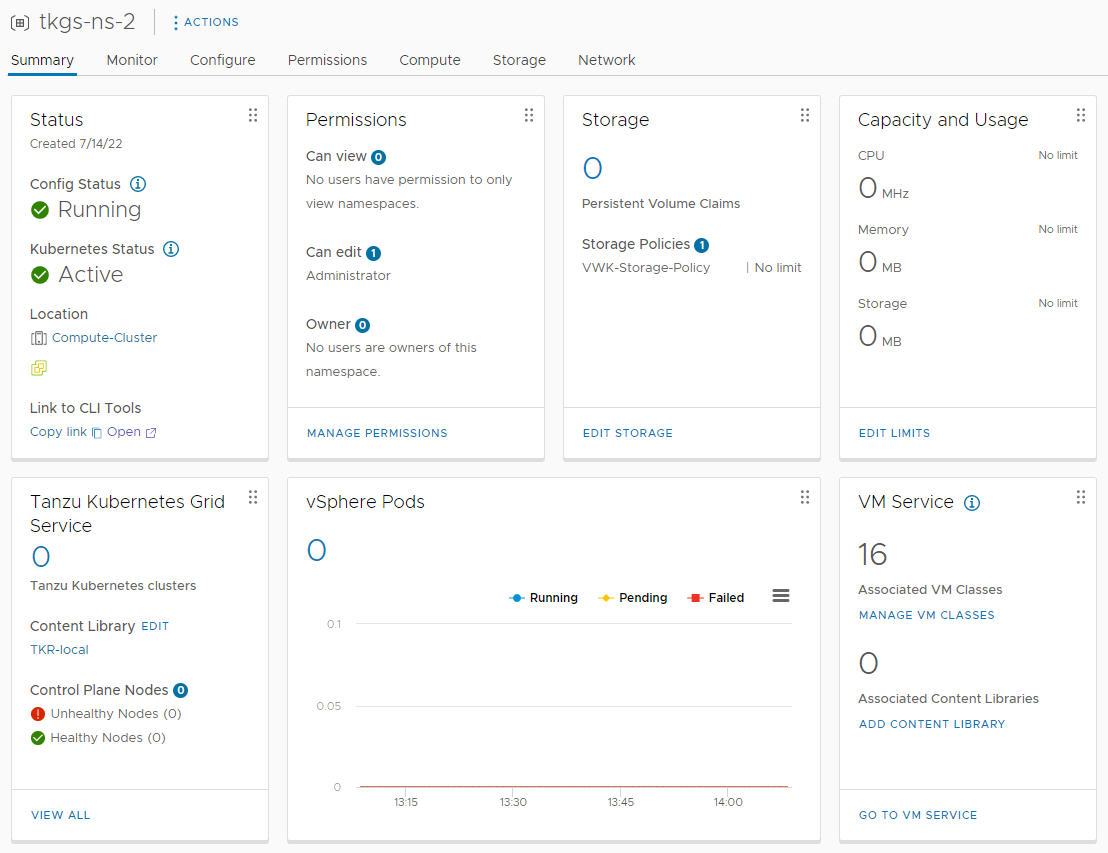

Role permissions are scoped to the vSphere Namespace. There are three role permissions that can be assigned to TKG cluster users and groups: Owner, Can Edit, and Can View. The table describes each role. For more information, see About Identity and Access Management for TKG Service Clusters.

If you are using vCenter Single Sign-On, all three roles are available. To assign SSO users and groups to a vSphere Namespace, see Configure vSphere Namespace Permissions for vCenter Single Sign-On Users and Groups.

If you are using an external OIDC provider, the Owner role permission is not available. To assign OIDC users and groups to a vSphere Namespace, see Configure vSphere Namespace Permissions for External Identity Provider Users and Groups.

| Role | Description |
|---|---|
| Owner | The Owner role permission lets assigned users and groups administer vSphere Namespace objects using kubectl, and operate TKG clusters. See Enable vSphere Namespace Creation Using Kubectl. |
| Can Edit | The Can Edit role permission lets assigned users and groups view vSphere Namespace objects, and operate TKG clusters. A vCenter Single Sign-On user/group granted the Can Edit permission is bound to the Kubernetes cluster-admin role for each TKG cluster deployed in that vSphere Namespace. |
| Can View | The Can View role permission lets assigned users and groups view vSphere Namespace objects. Note: There is no equivalent read-only role in Kubernetes that the Can View permission can be bound to. To grant cluster access to Kubernetes users, see Grant Developers vCenter SSO Access to TKG Service Clusters. |
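As a quick reference, the role descriptions and the identity-provider restriction can be captured in a small lookup structure. The following is a minimal sketch, not a VMware API: the class, field, and function names are hypothetical, and it assumes that the Owner role's ability to administer vSphere Namespace objects also includes viewing them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RolePermission:
    """Illustrative summary of one vSphere Namespace role (hypothetical type)."""
    administer_namespace_objects: bool   # manage vSphere Namespace objects using kubectl
    view_namespace_objects: bool         # assumption: administering implies viewing
    operate_tkg_clusters: bool
    available_with_external_oidc: bool   # Owner is not offered with an external OIDC provider


# Values transcribed from the role descriptions in this section.
ROLES = {
    "Owner": RolePermission(True, True, True, False),
    "Can Edit": RolePermission(False, True, True, True),
    "Can View": RolePermission(False, True, False, True),
}


def assignable_roles(identity_source: str) -> list[str]:
    """Return the roles that can be assigned for 'sso' or 'oidc' identities."""
    if identity_source not in ("sso", "oidc"):
        raise ValueError("identity_source must be 'sso' or 'oidc'")
    return [
        name
        for name, role in ROLES.items()
        if identity_source == "sso" or role.available_with_external_oidc
    ]
```

For example, `assignable_roles("oidc")` omits Owner, which matches the restriction described for external identity providers.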

Configure Persistent Storage for the vSphere Namespace

You can assign one or more vSphere storage policies to a vSphere Namespace. An assigned storage policy controls datastore placement of persistent volumes in the vSphere storage environment.

Typically a vSphere administrator defines a vSphere storage policy. If you are using vSphere Zones, the storage policy must be configured with the Zonal topology. See Create a vSphere Storage Policy for TKG Service Clusters.

- Select the target vSphere Namespace.

- From the Storage tile, select Add Storage.

- Select one or more storage policies from the available options.

For each vSphere storage policy you assign to a vSphere Namespace, the system creates two matching Kubernetes storage classes in that vSphere Namespace. These storage classes are replicated to each TKG cluster deployed in that vSphere Namespace. See Using Storage Classes for Persistent Volumes.
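Workloads on a TKG cluster typically consume a replicated storage class through a PersistentVolumeClaim. The sketch below builds a standard Kubernetes PVC manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The storage class name `example-storage-policy`, the claim name, and the helper function are hypothetical placeholders. Use a name from the storage classes actually present in your cluster.

```python
import json


def build_pvc(name: str, namespace: str, storage_class: str, size: str = "1Gi") -> dict:
    """Build a PersistentVolumeClaim manifest that requests storage from a storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical name: replace with a storage class replicated to your cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    manifest = build_pvc("example-claim", "default", "example-storage-policy", "2Gi")
    print(json.dumps(manifest, indent=2))
```

Redirecting the printed output to a file gives a manifest that can be applied to the cluster in the usual way.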

Set Capacity and Usage Limits for the vSphere Namespace

When you configure a vSphere Namespace, a resource pool for the vSphere Namespace is created on vCenter Server. By default this resource pool is configured without any capacity and usage quota; resources are limited by the infrastructure.

| Option | Description |
|---|---|
| CPU | The amount of CPU resources to reserve for the vSphere Namespace. |
| Memory | The amount of memory to reserve for the vSphere Namespace. |
| Storage | The total amount of storage space to reserve for the vSphere Namespace. |
| Storage Policy Limit | Set the amount of storage dedicated individually to each of the storage policies that are associated with the vSphere Namespace. |

Typically, for TKG cluster deployments, you do not need to configure resource quotas on the vSphere Namespace. If you do assign quota limits, be aware of their potential impact on the TKG clusters deployed there.

- CPU and Memory Limits

  CPU and memory limits configured on the vSphere Namespace have no bearing on a TKG cluster deployed there if the TKG cluster nodes use the guaranteed VM class type. However, if the TKG cluster nodes use the best effort VM class type, the CPU and memory limits can impact the TKG cluster.

  Because the best effort VM class type allows resources to be overcommitted, you can run out of resources if you have set CPU and memory limits on the vSphere Namespace where you are provisioning TKG clusters. If contention occurs and the TKG cluster control plane is impacted, the cluster may stop running. For this reason, always use the guaranteed VM class type for production clusters. If you cannot use the guaranteed VM class type for all production nodes, at a minimum use guaranteed for the control plane nodes.

- Storage and Storage Policy Limits

  A Storage limit configured on the vSphere Namespace determines the overall amount of storage that is available to the vSphere Namespace for all TKG clusters deployed there.

  A Storage Policy limit configured on the vSphere Namespace determines the amount of storage available for that storage class for each TKG cluster where the storage class is replicated.

Some workloads have minimum storage requirements. See #GUID-9CA5FE35-8DA5-4F76-BD7F-81059CCA602E, for example.
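To reason about how planned volumes relate to these two limits, a back-of-the-envelope check can help. The sketch below is illustrative only: the function name and data layout are hypothetical, and it assumes that both the overall Storage limit and each Storage Policy limit are compared against totals summed across the vSphere Namespace. It does not describe how the platform enforces quotas internally.

```python
from collections import defaultdict


def check_storage_requests(requests, storage_limit_gib=None, policy_limits_gib=None):
    """Compare planned volume sizes against namespace storage limits.

    requests: iterable of (cluster_name, storage_policy, size_gib) tuples.
    storage_limit_gib: overall Storage limit, or None when no limit is set.
    policy_limits_gib: mapping of storage policy name to its limit in GiB.
    Returns a list of violation messages; an empty list means everything fits.
    """
    policy_limits_gib = policy_limits_gib or {}
    total = 0
    per_policy = defaultdict(int)
    for _cluster, policy, size_gib in requests:
        total += size_gib
        per_policy[policy] += size_gib

    violations = []
    if storage_limit_gib is not None and total > storage_limit_gib:
        violations.append(
            f"total {total} GiB exceeds Storage limit {storage_limit_gib} GiB"
        )
    for policy, used in sorted(per_policy.items()):
        limit = policy_limits_gib.get(policy)
        if limit is not None and used > limit:
            violations.append(
                f"policy {policy!r}: {used} GiB exceeds Storage Policy limit {limit} GiB"
            )
    return violations
```

With no limits configured, the function returns an empty list for any input, mirroring the default state in which the resource pool has no quota.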

Associate the TKR Content Library with the TKG Service

To provision TKG clusters you associate the TKG Service with a content library. To create a content library for hosting TKr images, see Administering Kubernetes Releases for TKG Service Clusters.

- Select (select the Supervisor instance).

- Select .

- Select .

- Select a TKR content library.

- Navigate to the vSphere Namespace and select Manage Namespace.

- Verify that the selected Content Library appears in the Tanzu Kubernetes Grid Service configure pane.

It is important to understand that the TKR content library is not namespace-scoped. All vSphere Namespaces use the same TKR content library that is configured for the TKG Service (TKGS). Any change to the TKR content library configured for TKGS applies to every vSphere Namespace.

Associate VM Classes with the vSphere Namespace

vSphere IaaS control plane provides several default VM classes, and you can create your own.

To provision a TKG cluster, associate one or more VM classes with the target vSphere Namespace. The associated VM classes are available for use by TKG cluster nodes deployed in that vSphere Namespace.

- Select the target vSphere Namespace.

- For the VM Service tile, select Add VM Class.

- Select each of the VM classes you want to add.

- To add the default VM classes, select the check box in the table header on page 1 of the list, navigate to page 2 and select the check box in the table header on that page. Verify that all classes are selected.

- To create a custom class, click Create New VM Class. Refer to the VM Services documentation for instructions.

- Click OK to complete the operation.

- Confirm that the classes are added. The VM Service tile shows Manage VM Classes.
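Before writing a cluster specification, it can be useful to check that every VM class you plan to use is associated with the vSphere Namespace, and that the control plane uses a guaranteed class as recommended earlier for production clusters. The following sketch is a hypothetical helper, not part of any VMware tooling. It assumes class names follow the default naming pattern in which guaranteed classes start with `guaranteed-`. Custom VM classes may not follow that pattern.

```python
def check_node_vm_classes(associated_classes, node_groups):
    """Validate planned VM classes against those associated with the namespace.

    associated_classes: set of VM class names associated with the vSphere Namespace.
    node_groups: mapping of node group name (for example "control-plane") to VM class.
    Returns (errors, warnings) as two lists of messages.
    """
    errors, warnings = [], []
    for group, vm_class in node_groups.items():
        if vm_class not in associated_classes:
            errors.append(
                f"{group}: VM class {vm_class!r} is not associated with the namespace"
            )
        elif group == "control-plane" and not vm_class.startswith("guaranteed-"):
            # Assumption: the name prefix identifies the class type.
            warnings.append(
                f"{group}: {vm_class!r} does not look like a guaranteed VM class"
            )
    return errors, warnings


if __name__ == "__main__":
    associated = {"guaranteed-medium", "best-effort-small"}
    plan = {"control-plane": "best-effort-small", "workers": "guaranteed-large"}
    errors, warnings = check_node_vm_classes(associated, plan)
    for message in errors + warnings:
        print(message)
```

In this example plan, the worker class is reported as an error because it is not associated with the namespace, and the control plane class produces a warning because it is not a guaranteed class.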

Verify vSphere Namespace Configuration

To verify vSphere Namespace configuration using kubectl, see Verify vSphere Namespace Readiness for Hosting TKG Service Clusters.