As a cloud administrator, you can utilize your VMware Cloud Foundation stack to manage GPU-enabled infrastructure and AI/ML workload domains. In VMware Aria Automation, you can set up and provide GPU-enabled deep learning virtual machines (DL VM) and Tanzu Kubernetes Grid (TKG) clusters as catalog items that data scientists and DevOps teams in your organization can request in the self-service Automation Service Broker catalog.

What is VMware Private AI Foundation?

VMware Private AI Foundation with NVIDIA provides a platform for provisioning AI workloads on VMware Cloud Foundation with NVIDIA GPUs. In addition, running AI workloads based on NVIDIA GPU Cloud (NGC) containers is specifically validated by VMware by Broadcom. To learn more, see What is VMware Private AI Foundation with NVIDIA.

Private AI Automation Services is the collective name for all VMware Private AI Foundation features that are available in VMware Aria Automation.

To get started with Private AI Automation Services, you run the Catalog Setup Wizard in VMware Aria Automation. The wizard helps you connect VMware Private AI Foundation to VMware Aria Automation.

How does the Catalog Setup Wizard work?

- Add a cloud account. Cloud accounts are the credentials that are used to collect data from and deploy resources to your vCenter instance.

- Add an NVIDIA license.

- Select content to add to the Automation Service Broker catalog.

- Create a project. The project links your users with cloud account regions, so that they can deploy cloud templates with networks and storage resources to your vCenter instance.

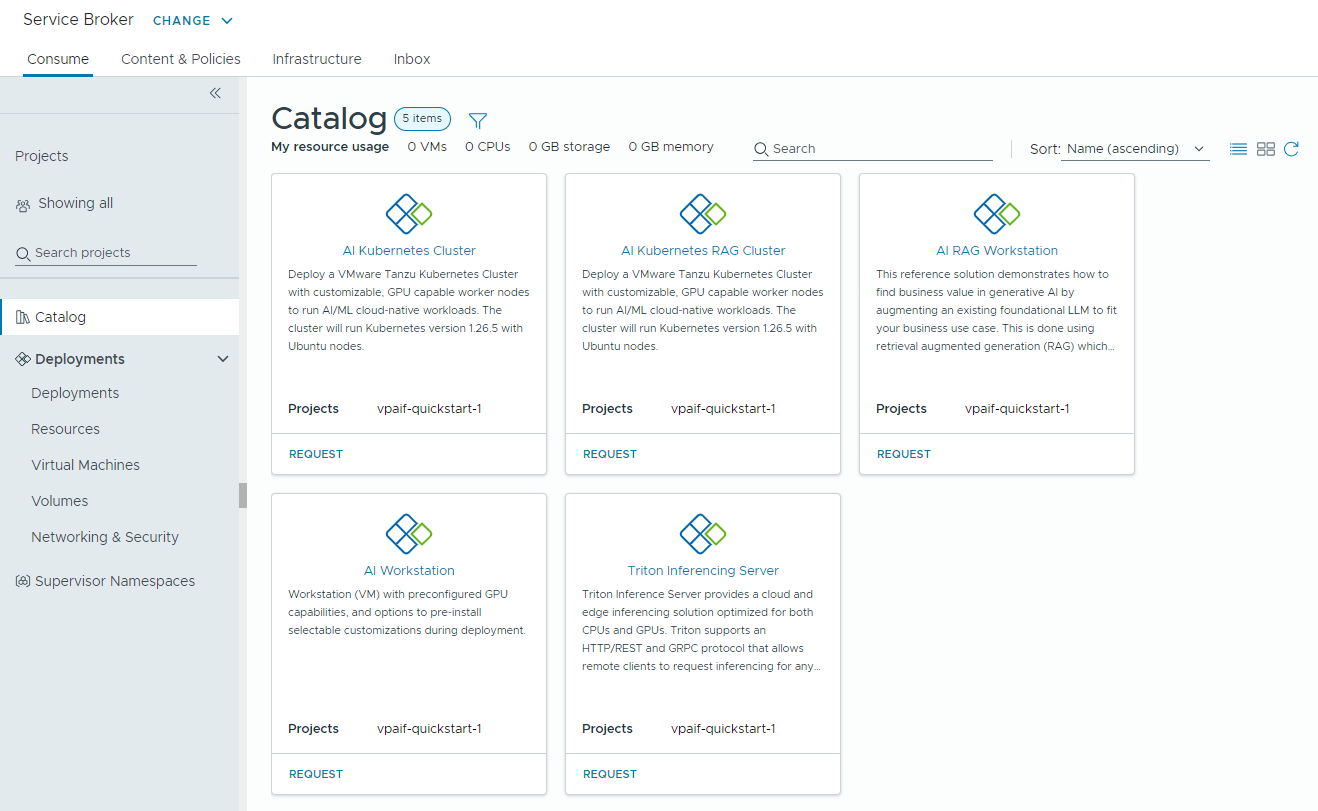

The wizard creates the following catalog items:

- AI Workstation – a GPU-enabled virtual machine that can be configured with the desired vCPU, vGPU, and memory, and with the option to pre-install AI/ML frameworks like PyTorch, CUDA Samples, and TensorFlow.

- AI RAG Workstation – a GPU-enabled virtual machine with Retrieval Augmented Generation (RAG) reference solution.

- Triton Inference Server – a GPU-enabled virtual machine with Triton Inference Server.

- AI Kubernetes Cluster – a VMware Tanzu Kubernetes Grid Cluster with GPU-capable worker nodes to run AI/ML cloud-native workloads.

- AI Kubernetes RAG Cluster – a VMware Tanzu Kubernetes Grid Cluster with GPU-capable worker nodes to run a reference RAG solution.

You can run the wizard multiple times if you need to change any of the settings that you provided, such as licensing, or if you want to create AI catalog items for other projects. Each time you run the wizard, five new catalog items are created in addition to any previously created items.

You can modify the templates for the catalog items that the wizard created to meet the specific needs of your organization.

Before you begin

- Verify that you are running VMware Aria Automation 8.18.

- Verify that you are running VMware Cloud Foundation 5.1.1 or later, which includes vCenter 8.0 Update 2b or later.

- Verify that you have a vCenter cloud account in VMware Aria Automation.

- Verify that you have an NVIDIA GPU Cloud Enterprise organization with a premium cloud service subscription.

- Verify that you have a GPU-enabled Supervisor cluster configured through workload management.

- Configure VMware Aria Automation for VMware Private AI Foundation with NVIDIA. See Set Up VMware Aria Automation for VMware Private AI Foundation with NVIDIA.

- Complete the VMware Cloud Foundation Quickstart before running the Catalog Setup Wizard. Your SDDC and Supervisor clusters must be registered with VMware Aria Automation. See How do you get started with VMware Aria Automation using the VMware Cloud Foundation Quickstart.

- Verify that you have generated the client configuration token from the NVIDIA licensing server and that you have your NVIDIA NGC Portal API key. The NVIDIA NGC Portal API key is used to download and install vGPU drivers.

- Configure Single Sign-On (SSO) for Cloud Consumption Interface (CCI). See Setting Up Single Sign-On for CCI.

- Verify that you are subscribed to the content library at https://packages.vmware.com/dl-vm/lib.json.
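
The minimum-version prerequisite above can be checked mechanically once you know which version is installed. The following is a minimal sketch, assuming you have already looked up the version string yourself; `parse_version` and `meets_minimum` are hypothetical helpers and are not part of any VMware API.

```python
import re
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '5.1.1' into a comparable tuple (5, 1, 1)."""
    if not re.fullmatch(r"\d+(\.\d+)*", text):
        raise ValueError(f"not a dotted numeric version: {text!r}")
    return tuple(int(part) for part in text.split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if `installed` is the same as or later than `minimum`."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so '5.2' compares as '5.2.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


# Minimum taken from the prerequisites list; the installed values are examples.
VCF_MINIMUM = "5.1.1"
print(meets_minimum("5.2", VCF_MINIMUM))    # True
print(meets_minimum("5.1.0", VCF_MINIMUM))  # False
```

The comparison is numeric per component, so a version such as 5.10 correctly sorts after 5.9, which a plain string comparison would get wrong.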

Procedure

- After you install VMware Aria Automation and log in for the first time, click Launch Quickstart.

- On the Private AI Automation Services card, click Start.

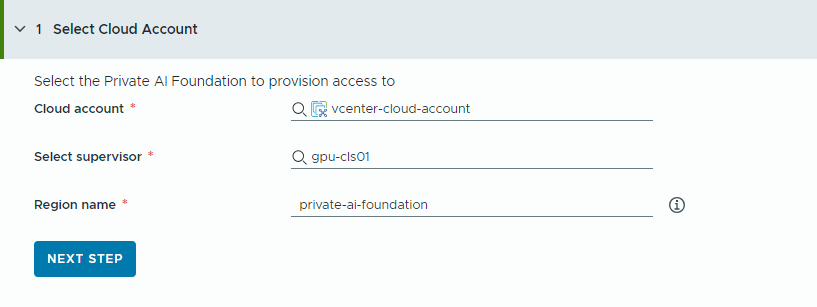

- Select the cloud account to provision access to.

Remember that all values here are use case samples. Your account values depend on your environment.

- Select a vCenter cloud account.

- Select a GPU-enabled supervisor.

- Enter a region name.

A region is automatically selected if the supervisor is already configured with a region.

If the supervisor is not associated with a region, you add one in this step. Consider using a descriptive name for your region that helps your users distinguish GPU-enabled regions from other available regions.

- Click Next Step.

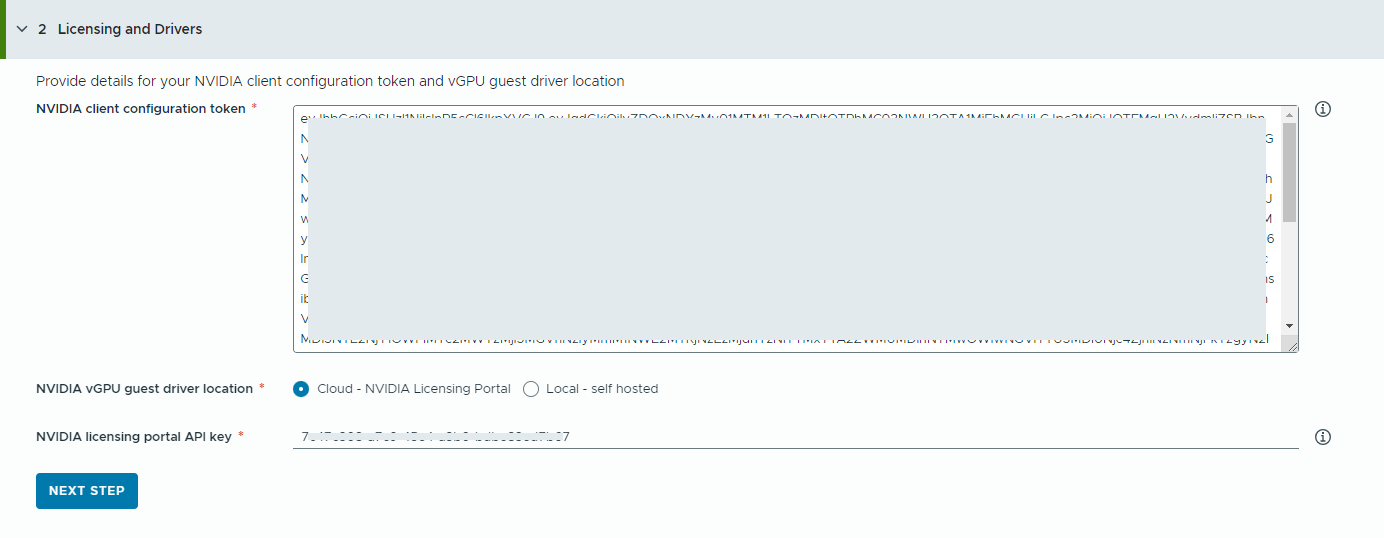

- Provide information about your NVIDIA license server.

- Copy and paste the contents of the NVIDIA client configuration token.

The client configuration token is needed to enable full capabilities of the vGPU driver.

- Select the location of the NVIDIA vGPU drivers.

- Cloud – the NVIDIA vGPU driver is hosted on the NVIDIA Licensing Portal.

You must provide the NVIDIA Licensing Portal API key, which is used to evaluate if a user has the right entitlement to download the NVIDIA vGPU drivers. The API key must be a UUID.

Note: The API key that you generate from the NVIDIA Licensing Portal is not the same as the NVAIE API Key.

- Local – the NVIDIA vGPU driver is hosted on-premises and is accessed from a private network.

You must provide the location of the vGPU guest drivers for VMs.

For air-gapped environments, the vGPU driver must be available on your private network or data center.

- Click Next Step.

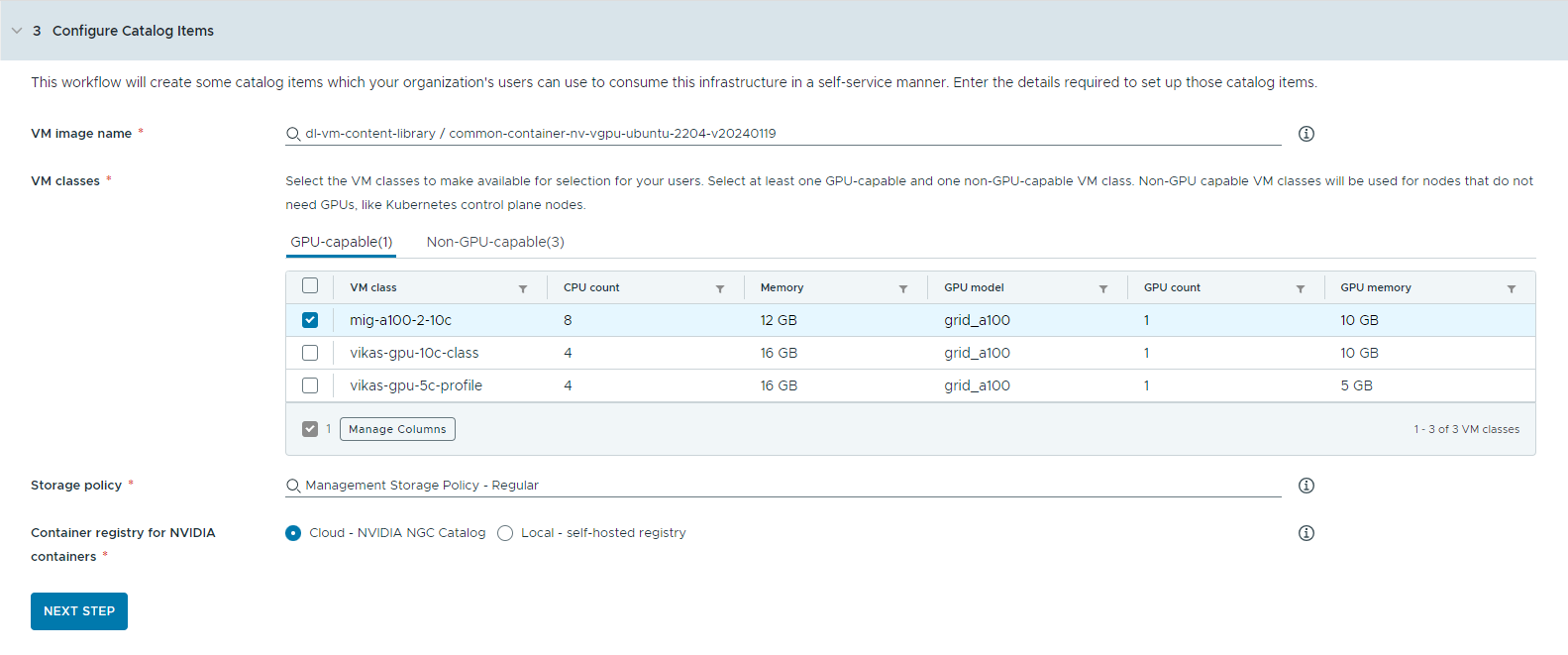

- Configure the catalog items.

- Select the content library that contains the deep learning VM image.

You can access only one content library at a time. If the content library contains Kubernetes images, those images are filtered out.

- Select the VM image you want to use to create the workstation VM.

- Select the VM classes you want to make available to your catalog users.

You must add at least one GPU-capable and one non-GPU-capable class.

- GPU-enabled VM classes are used for the deep learning VM and for the worker nodes of the TKG cluster. When the catalog item is deployed, the TKG cluster is created with the selected VM classes.

- Non-GPU-capable nodes are required to run the Kubernetes control planes.

- Select the storage policy to apply to the virtual machines.

- Specify the container registry from which you want to pull NVIDIA GPU Cloud resources.

- Cloud – the container images are pulled from the NVIDIA NGC catalog.

- Local – for air-gapped environments, the containers are pulled from a private registry.

You must provide the location of the self-hosted registry. If the registry requires authentication, you must also provide login credentials.

You can use Harbor as a local registry for container images from the NVIDIA NGC catalog. See Setting Up a Private Harbor Registry in VMware Private AI Foundation with NVIDIA.

- (Optional) Configure a proxy server.

In environments without direct Internet access, the proxy server is used to download the vGPU driver and pull the non-RAG AI Workstation containers.

Note: Support for air-gapped environments is available for the AI Workstation and Triton Inference Server catalog items. The AI RAG Workstation and AI Kubernetes Cluster items do not support air-gapped environments and need Internet connectivity.

- Click Next Step.

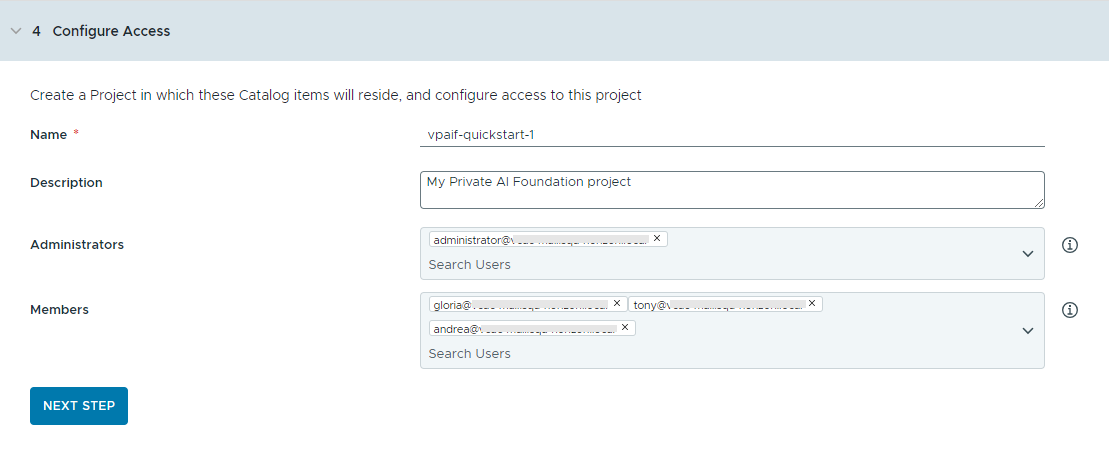

- Configure access to the catalog items by creating a project and assigning users.

Projects are used to manage people, assigned resources, cloud templates, and deployments.

- Enter a name and description for the project.

The project name must contain only lowercase alphanumeric characters or hyphens (-).

- To make the catalog items available to others, add an Administrator and Members.

Administrators have more permissions than the members have. For more information, see What are the VMware Aria Automation user roles.

- Click Next Step.

- Verify your configuration on the Summary page.

Consider saving the details for your configuration before running the wizard.

- Click Run Quickstart.
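
Several wizard fields have format rules stated in the steps above: the NVIDIA Licensing Portal API key must be a UUID, the project name can contain only lowercase alphanumeric characters or hyphens, and the VM class selection needs at least one GPU-capable and one non-GPU-capable class. The sketch below checks those rules before you open the wizard. It is illustrative only: `check_wizard_inputs`, the `VMClass` type, and all sample values are hypothetical, the canonical 8-4-4-4-12 UUID layout is an assumption, and the wizard's own validation may differ.

```python
import re
import uuid
from dataclasses import dataclass
from typing import List


@dataclass
class VMClass:
    name: str          # hypothetical VM class name
    gpu_capable: bool  # True if the class provides a GPU


def is_uuid(value: str) -> bool:
    """True if `value` is a UUID in canonical hyphenated form (assumed layout)."""
    try:
        return str(uuid.UUID(value)) == value.lower()
    except ValueError:
        return False


def check_wizard_inputs(api_key: str, project_name: str,
                        vm_classes: List[VMClass]) -> List[str]:
    """Return a list of problems; an empty list means all checks passed."""
    problems = []
    if not is_uuid(api_key):
        problems.append("API key is not a UUID")
    # Only lowercase letters, digits, and hyphens are allowed in the name.
    if not re.fullmatch(r"[a-z0-9-]+", project_name):
        problems.append("project name has characters other than a-z, 0-9, '-'")
    if not any(c.gpu_capable for c in vm_classes):
        problems.append("no GPU-capable VM class selected")
    if not any(not c.gpu_capable for c in vm_classes):
        problems.append("no non-GPU-capable VM class selected")
    return problems


# Sample values only; replace them with the values for your environment.
print(check_wizard_inputs(
    "123e4567-e89b-12d3-a456-426614174000",
    "ai-team-1",
    [VMClass("gpu-class", True), VMClass("cpu-class", False)],
))  # []
```

Returning a list of problems rather than raising on the first failure lets you review every issue in one pass.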

Results

Five catalog items (AI Workstation, AI RAG Workstation, Triton Inference Server, AI Kubernetes Cluster, and AI Kubernetes RAG Cluster) are created in the Automation Service Broker catalog, and users in your organization can now deploy them.
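
For planning purposes, the air-gapped support note from the procedure can be written down as a lookup keyed by the five catalog item names. This is only a sketch of that note: the dictionary and helper names are hypothetical, and AI Kubernetes RAG Cluster is marked as unknown because the note does not mention it.

```python
from typing import Dict, List, Optional

# True = supported in air-gapped environments, False = needs Internet access,
# None = not stated in the procedure's note.
AIR_GAPPED_SUPPORT: Dict[str, Optional[bool]] = {
    "AI Workstation": True,
    "AI RAG Workstation": False,
    "Triton Inference Server": True,
    "AI Kubernetes Cluster": False,
    "AI Kubernetes RAG Cluster": None,
}


def items_for_air_gapped_site() -> List[str]:
    """List only the catalog items the note explicitly marks as supported."""
    return [name for name, ok in AIR_GAPPED_SUPPORT.items() if ok is True]


print(items_for_air_gapped_site())
# ['AI Workstation', 'Triton Inference Server']
```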

What to do next

- Verify that the templates are available in the catalog to the members of the selected projects with whom you shared the content, and monitor the provisioning process to ensure successful deployment. See How do I deploy PAIF catalog items.

- If you want to control how long a deployment can exist, create a lease. See Setting up Automation Service Broker policies.

- To modify user inputs at request time, you can create a custom form. See Customize an Automation Service Broker icon and request form.

Troubleshooting

- If the Catalog Setup Wizard fails, run the wizard again for a different project.