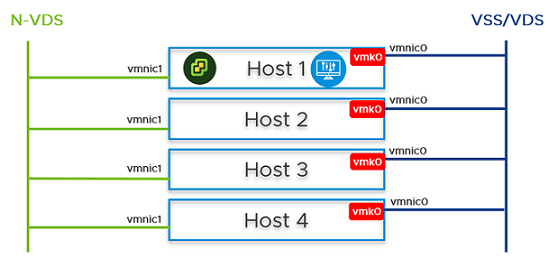

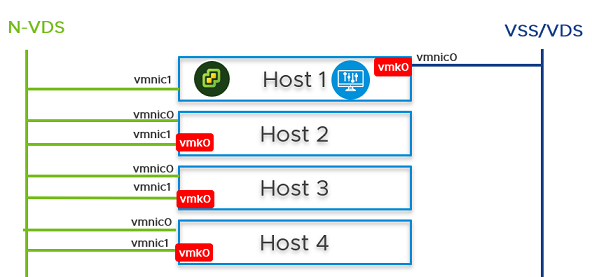

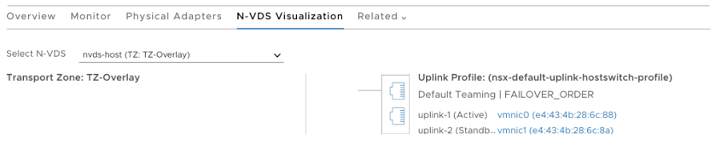

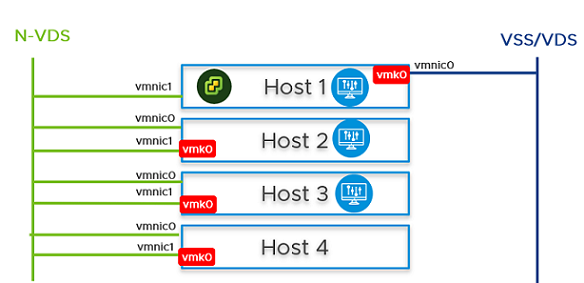

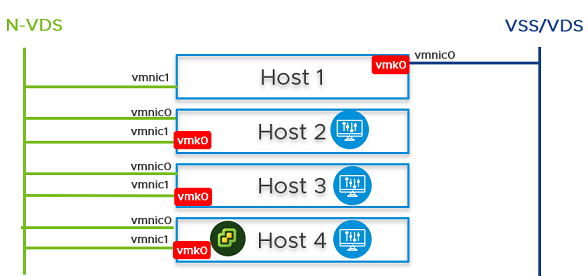

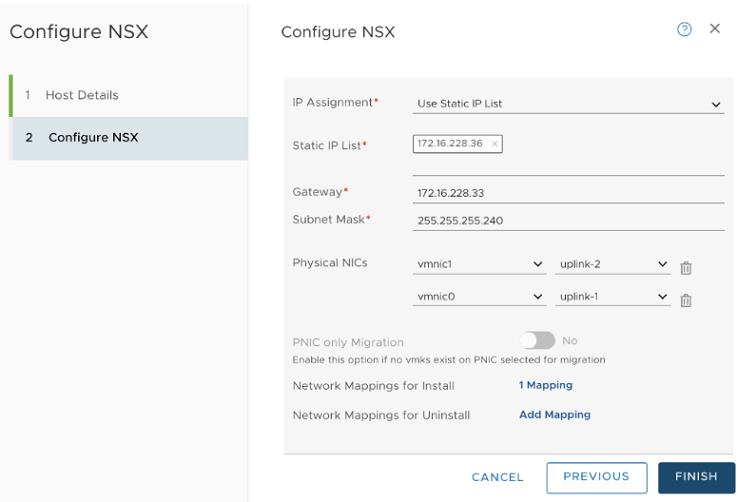

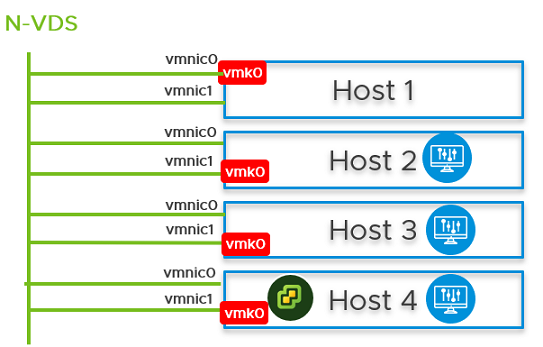

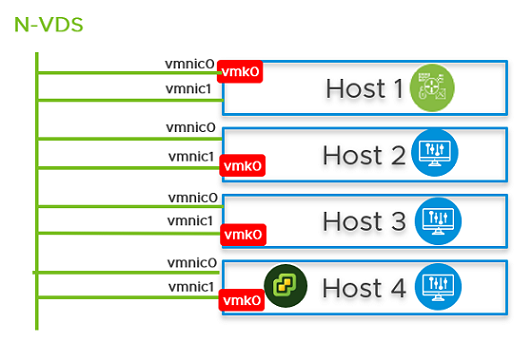

You can configure NSX Manager, host transport nodes, and NSX Edge VMs on a single cluster. Each host in the cluster provides two physical NICs that are configured for NSX.

Prerequisites

- All the hosts must be part of a vSphere cluster.

- Each host has two physical NICs enabled.

- Register all hosts to a VMware vCenter.

- Verify on the VMware vCenter that shared storage is available to be used by the hosts.

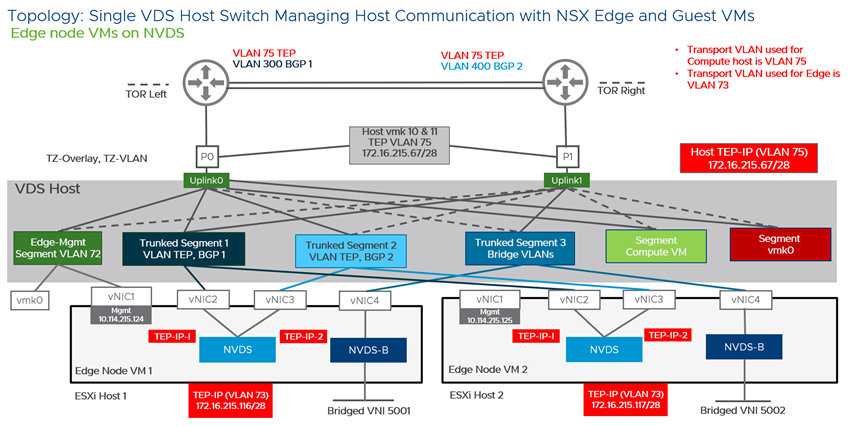

- Starting with NSX 3.1, the TEP of an NSX Edge VM can communicate directly with the TEP of the host on which the NSX Edge VM is running. Before NSX 3.1, the NSX Edge VM TEP had to be in a different VLAN than the host TEP.

Note: Alternatively, you can deploy the configuration described in this topic by using vSphere Distributed Switches. With vSphere Distributed Switches configured on the hosts, the procedure is simple: it involves neither vSphere Distributed Switch to N-VDS migration nor NSX Manager deployment on NSX distributed virtual port groups. However, the NSX Edge VM must connect to a VLAN segment to be able to use the same VLAN as the ESXi host TEP.

- Starting with NSX 3.2.2, you can enable multiple NSX Managers to manage a single VMware vCenter. See Multiple NSX Managers Managing a Single VMware vCenter.

After you configure a collapsed cluster, if you enable the VMware vCenter to work with multiple NSX Managers (that is, multiple NSX Managers are registered to the same VMware vCenter), you cannot deploy new NSX Manager nodes to the cluster. The workaround is to create a new cluster and deploy the NSX Manager nodes there.
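The version-dependent TEP VLAN rule stated in the prerequisites can be sketched as a small check. This is only an illustration of the rule as written in this topic, not an NSX API: the function names `parse_version` and `edge_tep_vlan_allowed` and the VLAN IDs are hypothetical, and the sketch does not model the additional VLAN segment requirement that applies when vSphere Distributed Switches are used.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as "3.1.2" into (3, 1, 2)."""
    return tuple(int(part) for part in version.split("."))


def edge_tep_vlan_allowed(nsx_version: str, host_tep_vlan: int, edge_tep_vlan: int) -> bool:
    """Hypothetical helper: apply the TEP VLAN rule from the prerequisites.

    Starting with NSX 3.1, the Edge VM TEP may share a VLAN with the TEP of
    the host it runs on. Before 3.1, the two TEPs must be in different VLANs.
    """
    if parse_version(nsx_version) >= (3, 1):
        return True
    return host_tep_vlan != edge_tep_vlan


if __name__ == "__main__":
    # Illustrative VLAN IDs only.
    print(edge_tep_vlan_allowed("3.0.2", 100, 100))
    print(edge_tep_vlan_allowed("3.1", 100, 100))
```

A check like this could run before deployment to flag a planned Edge TEP VLAN that the installed NSX version would not accept.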

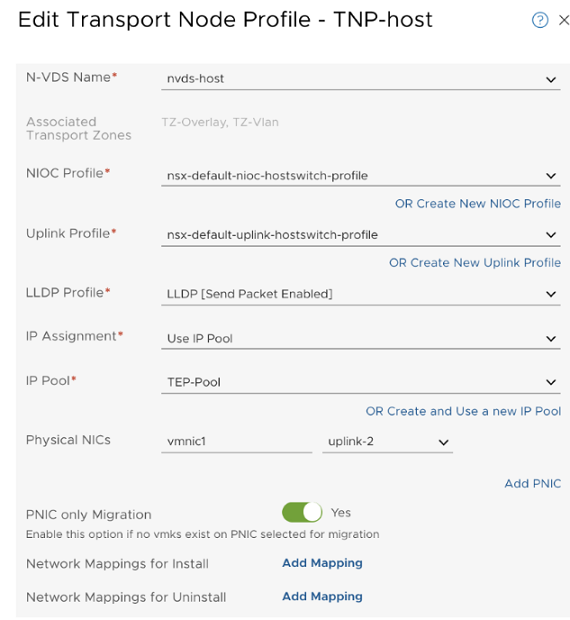

Note: Deploy the fully collapsed single vSphere cluster topology starting with the NSX 2.4.2 or 2.5 release.

The topology referenced in this procedure has:

- vSAN configured with the hosts in the cluster.

- A minimum of two physical NICs per host.

- vMotion and Management VMkernel interfaces.

- Starting with NSX 4.0, transport node hosts support only a VDS switch. However, NSX Edge is configured using an N-VDS switch.
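The per-host requirements listed in this topic (cluster membership, vCenter registration, at least two physical NICs, and Management and vMotion VMkernel interfaces) can be expressed as a pre-flight check. The following is a minimal sketch over a hand-built inventory, not a call into vCenter or NSX; the `HostInventory` class, its field names, and the host and NIC names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set

MIN_PHYSICAL_NICS = 2
REQUIRED_VMKERNEL_SERVICES = {"management", "vmotion"}


@dataclass
class HostInventory:
    """Hypothetical, manually populated description of one ESXi host."""
    name: str
    in_cluster: bool
    registered_to_vcenter: bool
    physical_nics: List[str] = field(default_factory=list)
    vmkernel_services: Set[str] = field(default_factory=set)


def check_host(host: HostInventory) -> List[str]:
    """Return a list of unmet requirements; an empty list means the host passes."""
    problems = []
    if not host.in_cluster:
        problems.append(f"{host.name}: not part of the vSphere cluster")
    if not host.registered_to_vcenter:
        problems.append(f"{host.name}: not registered to vCenter")
    if len(host.physical_nics) < MIN_PHYSICAL_NICS:
        problems.append(
            f"{host.name}: needs at least {MIN_PHYSICAL_NICS} physical NICs, "
            f"found {len(host.physical_nics)}"
        )
    missing = REQUIRED_VMKERNEL_SERVICES - host.vmkernel_services
    if missing:
        problems.append(
            f"{host.name}: missing VMkernel interfaces: {', '.join(sorted(missing))}"
        )
    return problems


if __name__ == "__main__":
    # Illustrative host with a single NIC and no vMotion interface.
    host = HostInventory("esxi-01", True, True, ["vmnic0"], {"management"})
    for problem in check_host(host):
        print(problem)
```

Cluster-level requirements such as shared storage or vSAN are not covered here; they would need a separate check against the cluster rather than against each host.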