Before you install VMware Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T integration, you must plan the environment as described in this topic.

Prerequisites

Familiarize yourself with the following VMware documentation:

- vSphere, vCenter, vSAN, and ESXi documentation

- NSX-T Data Center documentation

- NSX Container Plugin (NCP) documentation

Familiarize yourself with the following related documentation:

Review the following Tanzu Kubernetes Grid Integrated Edition documentation:

- vSphere with NSX-T Version Requirements

- Hardware Requirements for Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T

- VMware Ports and Protocols on the VMware site.

- Network Objects Created by NSX-T for Tanzu Kubernetes Grid Integrated Edition

Understand Component Interactions

Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T requires the following component interactions:

- vCenter, NSX-T Manager Nodes, NSX-T Edge Nodes, and ESXi hosts must be able to communicate with each other.

- The BOSH Director VM must be able to communicate with vCenter and the NSX-T Management Cluster.

- The BOSH Director VM must be able to communicate with all nodes in all Kubernetes clusters.

- Each Tanzu Kubernetes Grid Integrated Edition-provisioned Kubernetes cluster deploys the NSX-T Node Agent and Kube Proxy, which run as BOSH-managed processes on each worker node.

- NCP runs as a BOSH-managed process on the Kubernetes control plane node. In a multi-control plane node deployment, the NCP process runs on all control plane nodes, but is active on only one of them. If the NCP process on the active control plane node becomes unresponsive, BOSH activates another NCP process.

Plan Deployment Topology

Review the Deployment Topologies for Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T. The most common deployment topology is the NAT topology. Decide which deployment topology you will implement, and plan accordingly.

Plan Network CIDRs

Before you install Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T, plan the CIDRs and IP blocks that you will use in your deployment.

Plan for the following network CIDRs in the IPv4 address space according to the instructions in the VMware NSX-T documentation.

- VTEP CIDRs: One or more of these networks host your GENEVE Tunnel Endpoints on your NSX Transport Nodes. Size the networks to support all of your expected Host and Edge Transport Nodes. For example, a CIDR of `192.168.1.0/24` provides 254 usable IPs.

- TKGI MANAGEMENT CIDR: This small network is used to access Tanzu Kubernetes Grid Integrated Edition management components such as Ops Manager, BOSH Director, and Tanzu Kubernetes Grid Integrated Edition VMs as well as the Harbor Registry VM if deployed. For example, a CIDR of `10.172.1.0/28` provides 14 usable IPs. For the No-NAT deployment topologies, this is a corporate routable subnet /28. For the NAT deployment topology, this is a non-routable subnet /28, and DNAT needs to be configured in NSX-T to access the Tanzu Kubernetes Grid Integrated Edition management components.

- TKGI LB CIDR: This network provides your load balancing address space for each Kubernetes cluster created by Tanzu Kubernetes Grid Integrated Edition. The network also provides IP addresses for Kubernetes API access and Kubernetes exposed services. For example, `10.172.2.0/24` provides 256 usable IPs. This network is used when creating the `ip-pool-vips` described in Creating NSX-T Objects for Tanzu Kubernetes Grid Integrated Edition, or when the services are deployed. You enter this network in the Floating IP Pool ID field in the Networking pane of the Tanzu Kubernetes Grid Integrated Edition tile.
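The sizing arithmetic for these CIDRs can be checked with Python's standard `ipaddress` module. The following is a minimal sketch, not part of the product tooling: the helper name `address_counts` is an assumption for illustration, and the CIDRs are the examples quoted in the list above. It reports both the total address count and the count that remains after excluding the network and broadcast addresses.

```python
import ipaddress


def address_counts(cidr: str) -> tuple[int, int]:
    """Return (total addresses, usable host addresses) for an IPv4 CIDR.

    Hypothetical helper for planning purposes only.
    """
    # strict=True rejects CIDRs with host bits set, e.g. 192.168.1.5/24.
    network = ipaddress.ip_network(cidr, strict=True)
    total = network.num_addresses
    # Exclude the network and broadcast addresses; clamp for /31 and /32.
    usable = max(total - 2, 0)
    return total, usable


# Example CIDRs quoted in the list above; substitute your own plan.
planned = {
    "VTEP": "192.168.1.0/24",
    "TKGI MANAGEMENT": "10.172.1.0/28",
    "TKGI LB": "10.172.2.0/24",
}

for name, cidr in planned.items():
    total, usable = address_counts(cidr)
    print(f"{name}: {cidr} has {total} addresses, {usable} usable as hosts")
```

A /24 contains 256 addresses in total and 254 once the network and broadcast addresses are excluded. A /28 contains 16 and 14 respectively.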

Plan IP Blocks

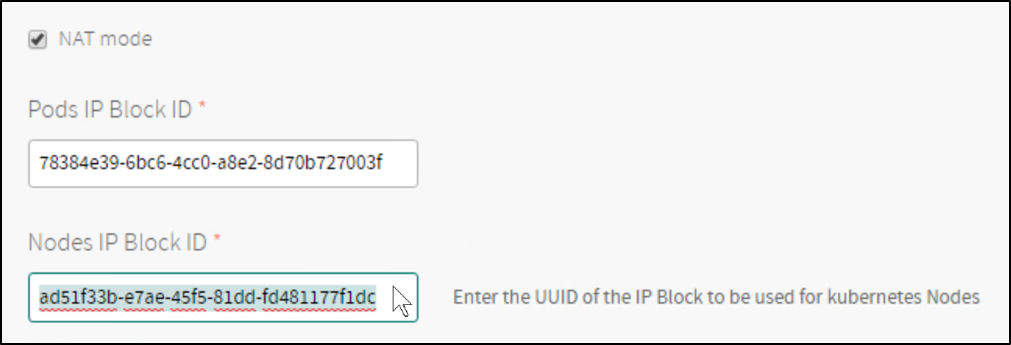

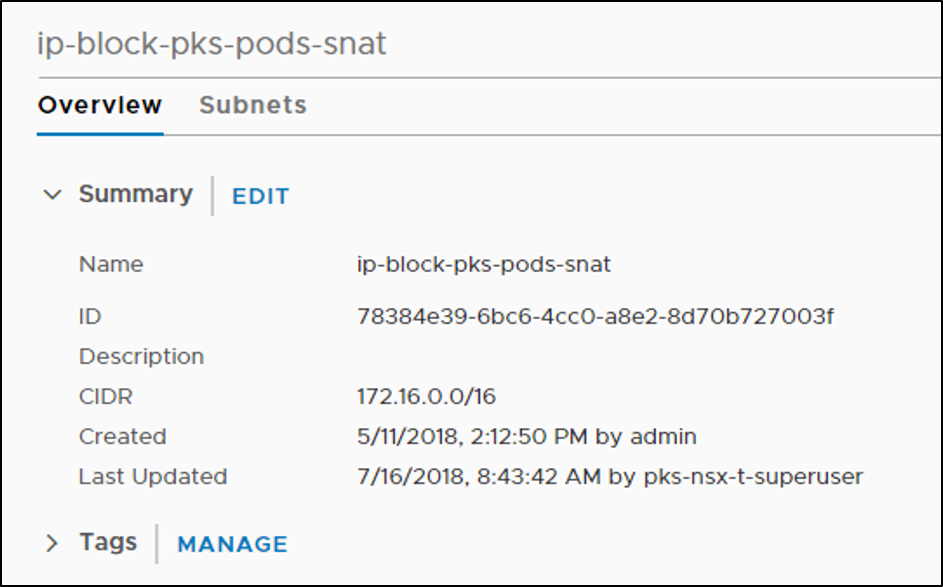

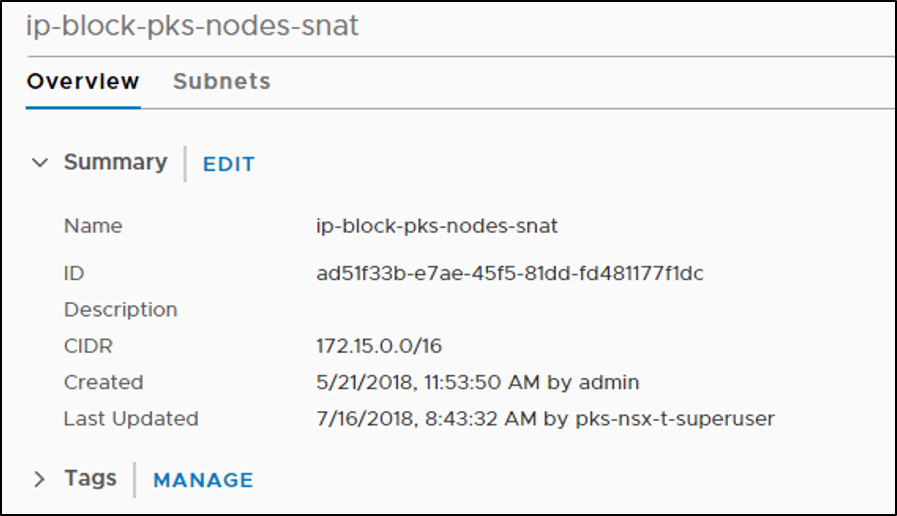

When you install Tanzu Kubernetes Grid Integrated Edition on NSX-T, you are required to specify the Pods IP Block ID and Nodes IP Block ID in the Networking pane of the Tanzu Kubernetes Grid Integrated Edition tile. These IDs map to the two IP blocks you must configure in NSX-T: the Pods IP Block for Kubernetes pods, and the Nodes IP Block for Kubernetes nodes (VMs). For more information, see the Networking section of Installing Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T Integration.

Pods IP Block

Each time a Kubernetes namespace is created, a subnet from the Pods IP Block is allocated. The subnet carved out from this block is a /24, which means a maximum of 256 pods can be created per namespace. When Tanzu Kubernetes Grid Integrated Edition deploys a Kubernetes cluster, three namespaces are created by default, and operators often create additional namespaces to facilitate cluster use. As a result, when creating the Pods IP Block, you must use a CIDR range larger than /24 (that is, one with a shorter prefix length) to ensure that NSX has enough IP addresses to allocate for all pods. The recommended size is /16. For more information, see Creating NSX-T Objects for Tanzu Kubernetes Grid Integrated Edition.

Note: By default, Pods IP Block is a block of non-routable, private IP addresses. After you deploy Tanzu Kubernetes Grid Integrated Edition, you can define a network profile that specifies a routable IP block for your pods. The routable IP block overrides the default non-routable Pods IP Block when a Kubernetes cluster is deployed using that network profile. For more information, see Routable Pods in Using Network Profiles (NSX-T Only).
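To see why a Pods IP Block must be larger than /24, it helps to count how many /24 namespace subnets fit in a block of a given size. The sketch below assumes only what the text states (one /24 per namespace). The function name `namespace_capacity` and the `172.16.0.0` example addresses are hypothetical and chosen for illustration.

```python
import ipaddress

# Per the text above: each namespace receives a /24 from the Pods IP Block.
NAMESPACE_PREFIX = 24


def namespace_capacity(pods_block: str) -> int:
    """Return how many /24 namespace subnets fit in the given block."""
    block = ipaddress.ip_network(pods_block)
    if block.prefixlen > NAMESPACE_PREFIX:
        # The block is smaller than a single namespace subnet.
        return 0
    return 2 ** (NAMESPACE_PREFIX - block.prefixlen)


# Hypothetical candidate blocks of increasing size.
for cidr in ("172.16.0.0/24", "172.16.0.0/20", "172.16.0.0/16"):
    print(f"{cidr}: room for {namespace_capacity(cidr)} namespaces")
```

A /24 block has room for a single namespace, which is fewer than the three created by default. A /20 has room for 16, and the recommended /16 has room for 256.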

Nodes IP Block

Each Kubernetes cluster deployed by Tanzu Kubernetes Grid Integrated Edition owns a /24 subnet. To deploy multiple Kubernetes clusters, the IP block that you reference in the Nodes IP Block ID field in the Networking pane of the Tanzu Kubernetes Grid Integrated Edition tile must be larger than /24. The recommended size is /16. For more information, see Creating NSX-T Objects for Tanzu Kubernetes Grid Integrated Edition.

Note: You can use a smaller nodes block size for no-NAT environments with a limited number of routable subnets. For example, /20 allows up to 16 Kubernetes clusters to be created.
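The relationship between the Nodes IP Block size and the number of clusters can be illustrated by enumerating the /24 subnets inside a block. This is a sketch under stated assumptions: `10.40.0.0/20` is a hypothetical block, `cluster_subnets` is an illustrative helper, and the order in which NSX-T actually assigns subnets to clusters is not specified in this topic.

```python
import ipaddress

# Per the text above: each Kubernetes cluster owns a /24 from the Nodes IP Block.
CLUSTER_PREFIX = 24


def cluster_subnets(nodes_block: str) -> list:
    """List every /24 subnet contained in the given Nodes IP Block."""
    block = ipaddress.ip_network(nodes_block)
    # subnets() raises ValueError if the block is smaller than a /24.
    return list(block.subnets(new_prefix=CLUSTER_PREFIX))


# Hypothetical /20 block, as in the no-NAT note above.
subnets = cluster_subnets("10.40.0.0/20")
print(f"{len(subnets)} cluster subnets available")
print(f"first: {subnets[0]}, last: {subnets[-1]}")
```

For the /20 block this yields 16 subnets, from `10.40.0.0/24` through `10.40.15.0/24`, which matches the 16-cluster limit stated in the note.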

Reserved IP Blocks

Tanzu Kubernetes Grid Integrated Edition reserves several CIDR blocks and IP addresses for internal use. When deploying TKGI, do not use these CIDR blocks or IP addresses.

- Each Kubernetes cluster uses the `10.100.200.0/24` subnet for Kubernetes services. Do not use this IP range for the Nodes IP Block.

- Docker is installed on each Tanzu Kubernetes Grid Integrated Edition worker node and is assigned the `172.17.0.0/16` network interface. Do not use this CIDR range for any TKGI component, including Ops Manager, BOSH Director, the TKGI API VM, the TKGI DB VM, and the Harbor Registry VM.

- The Tanzu Kubernetes Grid Integrated Edition Management Console runs the Docker daemon and reserves for it the `172.18.0.0/16` subnet and the gateway `172.18.0.1`. Do not use this CIDR range or IP address unless you customize them during OVA configuration.

- The Harbor Registry requires IP blocks in the range `172.20.0.0/16`. Do not use this CIDR range, unless you change it in the Harbor tile configuration.

Note: The TKGI Management Console VM also reserves an unused docker0 interface on 172.17.0.0/16. This cannot be customized.

The table below summarizes the IP blocks reserved by TKGI. Do not use these when deploying TKGI components.

| IP Address Block | Reserved for | TKGI Component | Customizable |
|---|---|---|---|
| `10.100.200.0/24` | Kubernetes services | Nodes IP Block | No |
| `172.17.0.0/16` | Docker daemon | Worker node VM | No |
| `172.17.0.0/16` | Docker daemon | Management Console VM | No (unused) |
| `172.18.0.0/16` | Docker daemon | Management Console VM | Yes (OVA configuration) |
| `172.18.0.1` | Docker gateway | Management Console VM | Yes (OVA configuration) |
| `172.20.0.0/16` | Docker daemon | Harbor Registry VM | Yes (Harbor tile > Networking) |
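A planned CIDR can be checked against the reserved blocks before deployment. The sketch below is one possible approach using Python's `ipaddress` module. The `RESERVED` mapping is transcribed from the table above, and `find_conflicts` is a hypothetical helper. As a simplification, it treats every reserved block as off limits for every component and ignores whether a block has been customized.

```python
import ipaddress

# Reserved blocks transcribed from the table above.
RESERVED = {
    "10.100.200.0/24": "Kubernetes services",
    "172.17.0.0/16": "Docker daemon (worker nodes, Management Console docker0)",
    "172.18.0.0/16": "Docker daemon (Management Console, customizable)",
    "172.20.0.0/16": "Docker daemon (Harbor Registry, customizable)",
}


def find_conflicts(cidr: str) -> list:
    """Return descriptions of the reserved blocks that overlap the CIDR."""
    candidate = ipaddress.ip_network(cidr)
    return [
        f"{reserved} ({purpose})"
        for reserved, purpose in RESERVED.items()
        if candidate.overlaps(ipaddress.ip_network(reserved))
    ]


# Hypothetical candidates: the first is clean, the others collide.
for cidr in ("10.172.1.0/28", "10.100.0.0/16", "172.16.0.0/12"):
    conflicts = find_conflicts(cidr)
    status = "; ".join(conflicts) if conflicts else "no conflicts"
    print(f"{cidr}: {status}")
```

Note that a wide block such as `172.16.0.0/12` overlaps all three Docker-related ranges at once, so a narrower block such as a /16 outside those ranges is easier to keep clear of them.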

Gather Other Required IP Addresses

To install Tanzu Kubernetes Grid Integrated Edition on vSphere with NSX-T, you will need to know the following:

- Subnet name where you will install Tanzu Kubernetes Grid Integrated Edition

- VLAN ID for the subnet

- CIDR for the subnet

- Netmask for the subnet

- Gateway for the subnet

- DNS server for the subnet

- NTP server for the subnet

- IP address and CIDR you plan to use for the NSX-T Tier-0 Router
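The values in this list can be recorded in a small structure and sanity-checked before installation. The following is only an illustrative sketch: the `SubnetPlan` class, its field names, and all sample values are hypothetical, and the accepted VLAN ID range of 0 to 4094 is an assumption that may not match your environment. It checks that the netmask matches the CIDR and that the gateway lies inside the subnet.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class SubnetPlan:
    """Hypothetical record of the subnet details listed above."""
    name: str
    vlan_id: int
    cidr: str
    netmask: str
    gateway: str
    dns_server: str
    ntp_server: str

    def problems(self) -> list:
        """Return a list of inconsistencies found in the plan."""
        issues = []
        network = ipaddress.ip_network(self.cidr)
        if ipaddress.ip_address(self.netmask) != network.netmask:
            issues.append(f"netmask {self.netmask} does not match {self.cidr}")
        if ipaddress.ip_address(self.gateway) not in network:
            issues.append(f"gateway {self.gateway} is outside {self.cidr}")
        # Assumed valid range; adjust to your environment's conventions.
        if not 0 <= self.vlan_id <= 4094:
            issues.append(f"VLAN ID {self.vlan_id} is out of range")
        return issues


# Sample values are placeholders, not recommendations.
plan = SubnetPlan(
    name="tkgi-mgmt",
    vlan_id=1548,
    cidr="10.172.1.0/28",
    netmask="255.255.255.240",
    gateway="10.172.1.1",
    dns_server="10.172.40.1",
    ntp_server="ntp.example.com",
)
print(plan.problems() or "plan is consistent")
```

The DNS and NTP entries are stored but not validated here, because they may be host names and may legitimately live outside the subnet.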