Non-Volatile Memory (NVM) storage devices that use persistent memory have become popular in data centers. To connect to local and networked NVM devices, ESXi uses the NVM Express (NVMe) protocol, a standardized protocol designed specifically for high-performance multi-queue communication with NVM devices.

VMware NVMe Concepts

Before you begin working with NVMe storage in the ESXi environment, learn about basic NVMe concepts.

- NVM Express (NVMe)

- NVMe is a method for connecting and transferring data between a host and a target storage system. NVMe is designed for use with faster storage media equipped with non-volatile memory, such as flash devices. This type of storage can achieve low latency, low CPU usage, and high performance, and generally serves as an alternative to SCSI storage.

- NVMe Transports

- NVMe storage can be attached directly to a host through a PCIe interface or indirectly through different fabric transports. VMware NVMe over Fabrics (NVMe-oF) provides connectivity over a distance between a host and a target storage device on a shared storage array.

- NVMe Namespaces

- In the NVMe storage array, a namespace is a storage volume backed by some quantity of non-volatile memory. In the context of ESXi, the namespace is analogous to a storage device, or LUN. After your ESXi host discovers the NVMe namespace, a flash device that represents the namespace appears on the list of storage devices in the vSphere Client. You can use the device to create a datastore and store virtual machines.

- NVMe Controllers

- A controller is associated with one or several NVMe namespaces and provides an access path between the ESXi host and the namespaces in the storage array. To access the controller, the host can use one of two mechanisms: controller discovery or controller connection. For more information, see Add Controllers for NVMe over Fabrics.

- Controller Discovery

- With this mechanism, the ESXi host first contacts a discovery controller. The discovery controller returns a list of available controllers. After you select a controller for your host to access, all namespaces associated with this controller become available to your host.

- Controller Connection

- Your ESXi host connects to the controller that you specify. All namespaces associated with this controller become available to your host.

- NVMe Subsystem

- Generally, an NVMe subsystem is a storage array that might include several NVMe controllers, several namespaces, a non-volatile memory storage medium, and an interface between the controller and non-volatile memory storage medium. The subsystem is identified by a subsystem NVMe Qualified Name (NQN).

- VMware High-Performance Plug-in (HPP)

- By default, the ESXi host uses the HPP to claim the NVMe-oF targets. When selecting physical paths for I/O requests, the HPP applies an appropriate Path Selection Scheme (PSS). For information about the HPP, see VMware High Performance Plug-In and Path Selection Schemes. To change the default path selection mechanism, see Change the Path Selection Policy.
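The relationships among subsystems, controllers, and namespaces, and the difference between controller discovery and controller connection, can be sketched as a small data model. This is an illustrative sketch only, not an ESXi API: the class names, the `discover` function, the `connect` method, and the example NQN `nqn.2014-08.com.example:array1` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Namespace:
    nsid: int       # namespace ID; analogous to a storage device or LUN
    size_gib: int   # illustrative capacity


@dataclass
class Controller:
    controller_id: int
    namespaces: List[Namespace] = field(default_factory=list)


@dataclass
class Subsystem:
    nqn: str        # subsystem NVMe Qualified Name (hypothetical value below)
    controllers: Dict[int, Controller] = field(default_factory=dict)


def discover(subsystems: List[Subsystem]) -> List[Tuple[str, int]]:
    """Controller discovery: stand-in for a discovery controller that
    returns the list of available controllers."""
    return [(s.nqn, cid) for s in subsystems for cid in sorted(s.controllers)]


@dataclass
class Host:
    # Namespaces visible to the host, keyed by (subsystem NQN, namespace ID).
    devices: Dict[Tuple[str, int], Namespace] = field(default_factory=dict)

    def connect(self, subsystem: Subsystem, controller_id: int) -> None:
        """Controller connection: attach to the specified controller; all of
        its namespaces become available to the host."""
        for ns in subsystem.controllers[controller_id].namespaces:
            self.devices[(subsystem.nqn, ns.nsid)] = ns


ns1, ns2 = Namespace(1, 100), Namespace(2, 200)
array = Subsystem(
    nqn="nqn.2014-08.com.example:array1",
    controllers={0: Controller(0, [ns1, ns2]), 1: Controller(1, [ns2])},
)

host = Host()
offered = discover([array])          # discovery: list the controllers first
host.connect(array, offered[0][1])   # then connect to the selected one
print(sorted(nsid for _, nsid in host.devices))  # [1, 2]
```

In this sketch, discovery only adds a listing step before the connection; in both mechanisms the host ends up with every namespace associated with the chosen controller.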

Basic VMware NVMe Architecture and Components

ESXi supports local NVMe over PCIe storage and shared NVMe-oF storage, such as NVMe over Fibre Channel, NVMe over RDMA (RoCE v2), and NVMe over TCP.

- VMware NVMe over PCIe

- In this configuration, your ESXi host uses a PCIe storage adapter to access one or more local NVMe storage devices. After you install the adapter on the host, the host discovers available NVMe devices, and they appear in the list of storage devices in the vSphere Client.

- VMware NVMe over Fibre Channel

- This technology maps NVMe onto the Fibre Channel protocol to enable the transfer of data and commands between a host and a target storage device. This transport can use existing Fibre Channel infrastructure upgraded to support NVMe.

To access NVMe over Fibre Channel storage, install a Fibre Channel storage adapter that supports NVMe on your ESXi host. You do not need to configure the adapter. It automatically connects to an appropriate NVMe subsystem and discovers all shared NVMe storage devices that it can reach. You can later reconfigure the adapter and disconnect its controllers or connect other controllers that were not available when the host booted. For more information, see Add Controllers for NVMe over Fabrics.

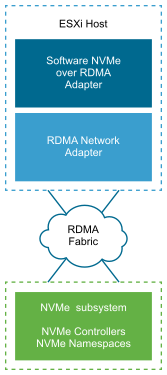

- NVMe over RDMA (RoCE v2)

- This technology uses a remote direct memory access (RDMA) transport between two systems on the network. The transport enables data exchange directly between the main memory of the two systems, bypassing the operating system and the processor of either system.

ESXi supports RDMA over Converged Ethernet v2 (RoCE v2) technology, which enables remote direct memory access over an Ethernet network.

To access storage, the ESXi host uses an RDMA network adapter installed on your host and a software NVMe over RDMA storage adapter. You must configure both adapters to use them for storage discovery. For more information, see Configuring NVMe over RDMA (RoCE v2) on ESXi.

- NVMe over TCP

- This technology uses Ethernet connections between two systems. To access storage, the ESXi host uses a network adapter installed on your host and a software NVMe over TCP storage adapter. You must configure both adapters to use them for storage discovery. For more information, see Configuring NVMe over TCP on ESXi.
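The adapter requirements of the four transports described above can be summarized in a small lookup table. This is a hedged sketch for comparison only: the names `Transport`, `TransportProfile`, `PROFILES`, and `needs_configuration` are hypothetical and do not correspond to any ESXi interface. The PCIe entry assumes that no manual configuration is needed, because the description mentions only installing the adapter.

```python
from dataclasses import dataclass
from enum import Enum


class Transport(Enum):
    PCIE = "NVMe over PCIe"
    FC = "NVMe over Fibre Channel"
    RDMA = "NVMe over RDMA (RoCE v2)"
    TCP = "NVMe over TCP"


@dataclass(frozen=True)
class TransportProfile:
    shared: bool            # True for NVMe-oF (shared) storage, False for local
    hardware_adapter: str   # adapter installed on the host
    software_adapter: bool  # whether a software NVMe storage adapter is also used
    manual_config: bool     # whether the adapters must be configured for discovery


PROFILES = {
    # Assumption: discovery follows installation; no configuration step is described.
    Transport.PCIE: TransportProfile(False, "PCIe storage adapter", False, False),
    # The NVMe-capable Fibre Channel adapter connects and discovers automatically.
    Transport.FC: TransportProfile(True, "Fibre Channel storage adapter that supports NVMe", False, False),
    # Both the RDMA network adapter and the software adapter must be configured.
    Transport.RDMA: TransportProfile(True, "RDMA network adapter", True, True),
    # Both the network adapter and the software adapter must be configured.
    Transport.TCP: TransportProfile(True, "network adapter", True, True),
}


def needs_configuration(transport: Transport) -> bool:
    """Return True if the transport's adapters need configuration before discovery."""
    return PROFILES[transport].manual_config


fabric_transports = [t for t in Transport if PROFILES[t].shared]
for t in Transport:
    kind = "shared" if PROFILES[t].shared else "local"
    print(f"{t.value}: {kind}, configure adapters: {needs_configuration(t)}")
```

The table makes the main operational difference visible: the Ethernet-based transports pair a network adapter with a software storage adapter and require configuration, while the Fibre Channel adapter connects on its own.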