This topic gives an example of how not to use service level indicators (SLIs).

After reading Use Case 4, Use Case 5, and Best Practice 1, you can see that a given latency value, for example 261 ms, is much easier to meet as a p50 latency threshold than as a p99 latency threshold. A p50 threshold requires only 50 percent of response times to fall under the value, whereas a p99 threshold requires 99 percent of them to do so.
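The difference in strictness can be made concrete with a small sketch. It assumes a nearest-rank reading of percentiles, where a pN threshold is met when at least N percent of requests finish within the threshold. The function name `required_fast_requests` and the request count of 100 are hypothetical and chosen only for illustration.

```python
def required_fast_requests(percentile: int, total_requests: int) -> int:
    """Minimum number of requests that must finish within the threshold
    for the given percentile latency to meet it (nearest-rank assumption)."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (percentile * total_requests + 99) // 100


# Hypothetical window of 100 requests.
total = 100
for p in (50, 90, 99):
    needed = required_fast_requests(p, total)
    print(f"p{p}: at least {needed} of {total} requests must be within the threshold")
```

With the same threshold value, the p99 check leaves room for only one slow request in this window, while the p50 check tolerates fifty.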

It does not make sense to set the following SLIs for a single service:

p99 latency: 110 ms

p90 latency: 177 ms

p50 latency: 262 ms

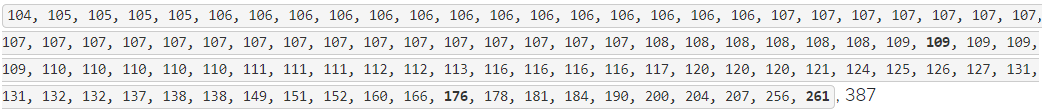

Why? Consider again the sorted dataset used in Best Practice 1, Best Practice 2, and Best Practice 3 where the numbers in bold represent the p50 (109 ms), p90 (176 ms), and p99 (261 ms) latencies.

Let’s see what happens if an SLI is set using a p99 latency threshold value of 110 ms. Given this dataset, the service would be considered unhealthy because the actual p99 latency of 261 ms is much greater than the threshold of 110 ms.

What if an SLI were set using a p90 latency threshold value of 177 ms? Given this dataset, the service would be considered healthy (but barely) because the actual p90 latency is 176 ms, which is just under the 177 ms threshold.

And finally, is the service healthy if the SLI was set to have a p50 latency threshold value of 262 ms? Yes, because the actual p50 latency is 109 ms, a value much lower than the 262 ms threshold.
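The three comparisons above can be reproduced in a few lines. This is a minimal sketch that uses only the percentile values and thresholds stated in this topic. It assumes that a service counts as healthy when the actual latency is at or below the threshold, and the name `evaluate_slis` is hypothetical.

```python
# Actual percentile latencies (ms) of the sample dataset, as stated in the text.
actual_ms = {"p50": 109, "p90": 176, "p99": 261}

# Thresholds (ms) from the SLI set that this topic argues against.
threshold_ms = {"p99": 110, "p90": 177, "p50": 262}


def evaluate_slis(actual: dict, thresholds: dict) -> dict:
    """Map each SLI name to True (healthy) or False (unhealthy).

    Assumption: healthy means actual latency <= threshold.
    """
    return {name: actual[name] <= limit for name, limit in thresholds.items()}


results = evaluate_slis(actual_ms, threshold_ms)
for name, healthy in results.items():
    status = "healthy" if healthy else "unhealthy"
    print(f"{name}: actual {actual_ms[name]} ms, threshold {threshold_ms[name]} ms, {status}")
```

Only the p99 check fails. The p50 check passes with a margin so large that it says almost nothing about the service.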

Setting SLIs as previously listed does not make sense because the most stringent latency value (110 ms) is expected of the largest percentage of requests (99 percent), while the least stringent latency value (262 ms) is expected of the smallest percentage of requests (50 percent). The p99 standard, already the hardest to meet, becomes exceedingly difficult, while the p50 standard, already the easiest, becomes so easy to meet that it tells you almost nothing.

You can set high standards, for example, an SLI using a p99 latency threshold of 110 ms, but don’t set the p90 and p50 latency thresholds higher than the p99 latency threshold. Set them to lower values so that they don’t become irrelevant.
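One way to catch this mistake early is to check that thresholds never decrease as the percentile increases. The sketch below is illustrative only: `find_inverted_thresholds` is a hypothetical helper, and the values in `consistent` are made-up numbers that merely satisfy the ordering rule.

```python
def find_inverted_thresholds(thresholds_ms: dict) -> list:
    """Return (lower_percentile, higher_percentile) pairs where the lower
    percentile was given a higher latency threshold than the higher one."""
    ordered = sorted(thresholds_ms.items())  # sort by percentile
    problems = []
    for (low_p, low_t), (high_p, high_t) in zip(ordered, ordered[1:]):
        if low_t > high_t:
            problems.append((low_p, high_p))
    return problems


# The SLI set from this topic: thresholds shrink as the percentile grows.
inverted = {50: 262, 90: 177, 99: 110}
print(find_inverted_thresholds(inverted))

# Hypothetical set that keeps the 110 ms p99 threshold and lowers the others.
consistent = {50: 60, 90: 90, 99: 110}
print(find_inverted_thresholds(consistent))
```

An empty result means that each lower percentile has a threshold no higher than the next percentile up, so every SLI still carries information.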