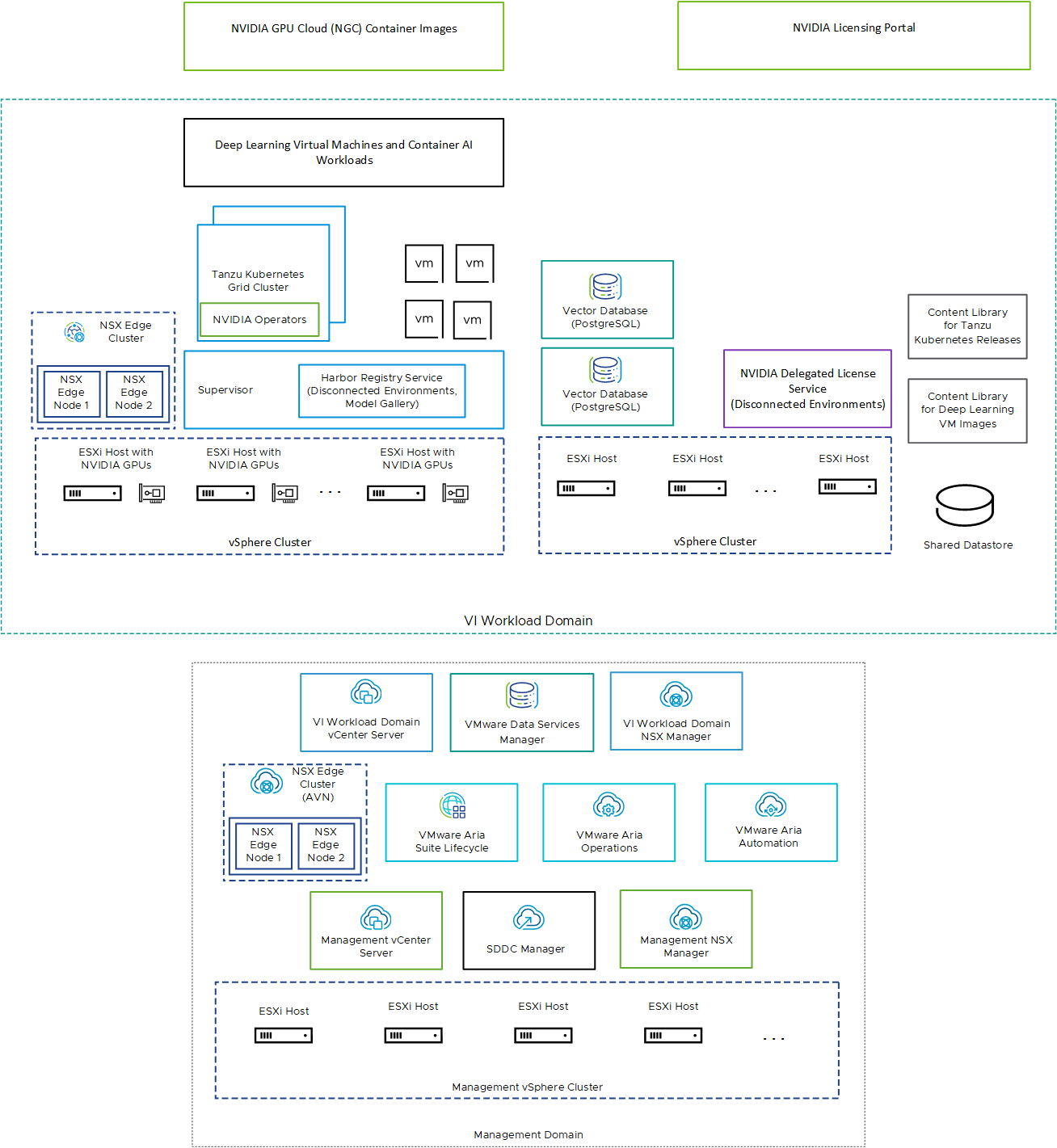

VMware Private AI Foundation with NVIDIA runs on top of VMware Cloud Foundation, adding support for AI workloads in VI workload domains with vSphere IaaS control plane, provisioned by using kubectl and VMware Aria Automation.

| Component | Description |
|---|---|
| GPU-enabled ESXi hosts | ESXi hosts that are configured in the following way: |
| Supervisor | One or more vSphere clusters enabled for vSphere IaaS control plane so that you can run virtual machines and containers on vSphere by using the Kubernetes API. A Supervisor is a Kubernetes cluster itself, serving as the control plane to manage workload clusters and virtual machines. |
| Harbor registry | You can use a Harbor registry in the following cases: |
| NSX Edge cluster | A cluster of NSX Edge nodes that provides 2-tier north-south routing for the Supervisor and the workloads it runs. The Tier-0 gateway on the NSX Edge cluster is in active-active mode. |
| NVIDIA Operators | |
| Vector database | |
| NVIDIA Licensing Portal and Delegated License Service (DLS) | You use the NVIDIA Licensing Portal to generate a client configuration token to assign a license to the guest vGPU driver in the deep learning virtual machine and the GPU Operators on TKG clusters. In a disconnected environment, or if you want your workloads to obtain license information without an Internet connection, you host the NVIDIA licenses locally on a Delegated License Service (DLS) appliance. |
| Content library | Content libraries store the images for the deep learning virtual machines and for the Tanzu Kubernetes releases. You use these images for AI workload deployment within the VMware Private AI Foundation with NVIDIA environment. In a connected environment, content libraries pull their content from VMware-managed public content libraries. In a disconnected environment, you must upload the required images manually or pull them from an internal content library mirror server. |
| NVIDIA GPU Cloud (NGC) catalog | A portal for GPU-optimized containers for AI and machine learning that are tested and ready to run on supported NVIDIA GPUs on premises on top of VMware Private AI Foundation with NVIDIA. |

As a cloud administrator, you use the management components in VMware Cloud Foundation in the following way:

| Management Component | Description |
|---|---|
| Management vCenter Server | Manage the ESXi hosts that are running the management components of the SDDC and support integration with other solutions for monitoring and management of the virtual infrastructure. |
| Management NSX Manager | Provide networking services to the management workloads in VMware Cloud Foundation. |
| SDDC Manager | |
| VI Workload Domain vCenter Server | Enable and configure a Supervisor. |
| VI Workload Domain NSX Manager | SDDC Manager uses this NSX Manager to deploy and update NSX Edge clusters. |
| NSX Edge Cluster (AVN) | Place the VMware Aria Suite components on a pre-defined configuration of NSX segments, called application virtual networks (AVNs), for dynamic routing and load balancing. |
| VMware Aria Suite Lifecycle | Deploy and update VMware Aria Automation and VMware Aria Operations. |
| VMware Aria Automation | Add self-service catalog items for deploying AI workloads for DevOps engineers, data scientists, and MLOps engineers. |
| VMware Aria Operations | Monitor the GPU consumption in the GPU-enabled workload domains. |
| VMware Data Services Manager | Create vector databases, such as a PostgreSQL database with pgvector extension. |
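
To make the role of the vector database more concrete, the following is a minimal, illustrative sketch of the kind of similarity lookup that a vector database performs, written in plain Python rather than against PostgreSQL. The `DOCS` rows, their identifiers, and the `nearest` helper are hypothetical and exist only for this example. The ranking uses cosine distance, which is one of the distance measures that pgvector exposes (through its `<=>` operator).

```python
import math

# Hypothetical toy embeddings: (row id, vector). A real deployment would
# store model-generated embeddings in a pgvector column instead.
DOCS = [
    ("gpu-sizing", [0.9, 0.1, 0.0]),
    ("license-setup", [0.0, 1.0, 0.1]),
    ("vgpu-profiles", [0.8, 0.2, 0.1]),
]


def cosine_distance(a, b):
    """Return 1 - cos(angle between a and b); smaller means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, rows, k=2):
    """Return the ids of the k rows closest to the query vector."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]


if __name__ == "__main__":
    # Query vector pointing mostly along the first dimension.
    print(nearest([1.0, 0.0, 0.0], DOCS, k=2))
```

A database such as PostgreSQL with pgvector runs the same kind of ranking inside the database, typically with an index, so the application only sends the query vector and receives the closest rows.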