The Edge Pod components provide the fabric for the North-South connectivity to the provider networks. Multiple configurations can be used for performance, scale, and Edge services.

Edge Nodes

An Edge Node is the appliance that provides physical NICs to connect to the physical infrastructure and to the virtual domain. Edge Nodes serve as pools of capacity, dedicated to running network services that cannot be distributed to the hypervisors. The network functionality of the Edge node includes:

- Connectivity to physical infrastructure.
- Edge services such as NAT, DHCP, firewall, and load balancer.

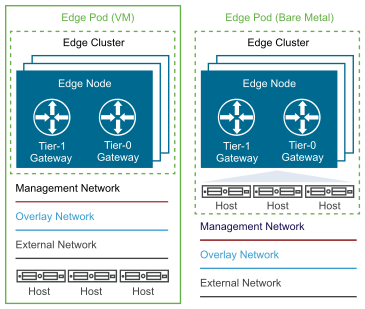

Edge nodes are available in two form-factors: VM and bare metal. Both use the Data Plane Development Kit (DPDK) for faster packet processing. The appropriate form-factor is deployed depending on the use case.

The NSX-T Data Center bare metal Edge runs on a physical server and is installed by using an ISO file or PXE boot. The bare metal Edge is recommended for production environments where services like NAT, firewall, and load balancer are needed in addition to Layer 3 unicast forwarding. A bare metal Edge differs from the VM form-factor Edge in terms of performance: it provides sub-second convergence, faster failover, and throughput greater than 10 Gbps.

The NSX-T Data Center Edge in VM form-factor is installed by using an OVA, OVF, or ISO file. Depending on the required functionality, there are deployment-specific VM form-factors.

Edge Clusters

Edge nodes are deployed as pools of capacity (a cluster), dedicated to running network services that cannot be distributed to the hypervisors. An Edge cluster contains either all VM or all bare metal form-factor nodes.

The Edge cluster provides scale-out, redundant, and high-throughput gateway functionality for logical networks. Scale-out from the logical networks to the Edge nodes is achieved by using ECMP. There is total flexibility in assigning gateways to Edge nodes and clusters. Tier-0 and Tier-1 gateways can be hosted on either the same or different Edge clusters. Centralized services must be enabled for the Tier-1 gateway to coexist in the same cluster.
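As a rough illustration of how ECMP spreads traffic across Edge nodes, the following sketch hashes a flow's 5-tuple to select one of several equal-cost paths. It is a generic, hypothetical example: the function name `pick_ecmp_path`, the choice of SHA-256, and the node names are assumptions made for illustration, and it does not represent the hashing that NSX-T Data Center actually performs.

```python
import hashlib


def pick_ecmp_path(src_ip, dst_ip, src_port, dst_port, protocol, paths):
    """Pick one equal-cost path for a flow (illustrative only).

    Hashing the 5-tuple keeps every packet of a flow on the same path,
    while different flows spread across the available paths.
    """
    if not paths:
        raise ValueError("at least one path is required")
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer hash value.
    index = int.from_bytes(digest[:8], "big") % len(paths)
    return paths[index]


# Hypothetical Edge node names used as next hops.
EDGE_PATHS = ["edge-01", "edge-02", "edge-03", "edge-04"]
```

Calling `pick_ecmp_path("10.0.0.5", "192.0.2.10", 40000, 443, "tcp", EDGE_PATHS)` repeatedly returns the same Edge node for that flow, whereas flows with different source ports are distributed across the list.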

There can be only one Tier-0 gateway per Edge node; however, multiple Tier-1 gateways can be hosted on one Edge node.

In addition to providing distributed routing capabilities, the Edge cluster enables Edge services at a provider or tenant scope. When one of these Edge services is configured or an uplink is defined on the gateway to connect to the physical infrastructure, a Service Router (SR) is instantiated on the Edge Node. Like the compute nodes in NSX-T, the Edge Node is a transport node, and it can likewise connect to more than one transport zone: one for overlay and another for N-S peering with external devices.

A maximum of eight Edge Nodes can be grouped in an Edge cluster. A Tier-0 gateway supports a maximum of eight equal-cost paths, so a maximum of eight Edge Nodes are supported for ECMP. Edge Nodes in an Edge cluster run Bidirectional Forwarding Detection (BFD) on both tunnel and management networks to detect Edge Node failure. The BFD protocol provides fast detection of failure for forwarding paths or forwarding engines, improving convergence. Bare metal form-factors can support sub-second convergence.
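The cluster rules described in this section (at most eight Edge Nodes per cluster, a single form-factor per cluster, and one Tier-0 gateway per Edge node) can be checked against a deployment plan before anything is built. The following is a minimal sketch under those stated rules only. The `EdgeNode` class, its field names, and `validate_edge_cluster` are hypothetical and are not part of any NSX-T API.

```python
from dataclasses import dataclass, field

MAX_EDGE_NODES_PER_CLUSTER = 8  # limit stated in this section


@dataclass
class EdgeNode:
    # Hypothetical planning record, not an NSX-T object.
    name: str
    form_factor: str  # "vm" or "bare_metal"
    tier0_gateways: list = field(default_factory=list)
    tier1_gateways: list = field(default_factory=list)


def validate_edge_cluster(nodes):
    """Return a list of rule violations; an empty list means the plan passes."""
    problems = []
    if len(nodes) > MAX_EDGE_NODES_PER_CLUSTER:
        problems.append(
            f"cluster has {len(nodes)} nodes; maximum is {MAX_EDGE_NODES_PER_CLUSTER}"
        )
    # A cluster must be all VM or all bare metal.
    if len({node.form_factor for node in nodes}) > 1:
        problems.append("cluster mixes VM and bare metal form-factors")
    # Only one Tier-0 gateway per Edge node; Tier-1 gateways are not limited here.
    for node in nodes:
        if len(node.tier0_gateways) > 1:
            problems.append(f"{node.name} hosts more than one Tier-0 gateway")
    return problems
```

For example, a plan with two VM Edge nodes that each host a single Tier-0 gateway and several Tier-1 gateways returns an empty list, while adding a bare metal node to the same cluster reports a mixed form-factor violation.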

NSX-T Data Center supports static routing and the dynamic routing protocol BGP on Tier-0 gateways on interfaces connecting to upstream routers. Tier-1 gateways support static routes but do not support any dynamic routing protocols.

See the NSX Design Guide for more information.