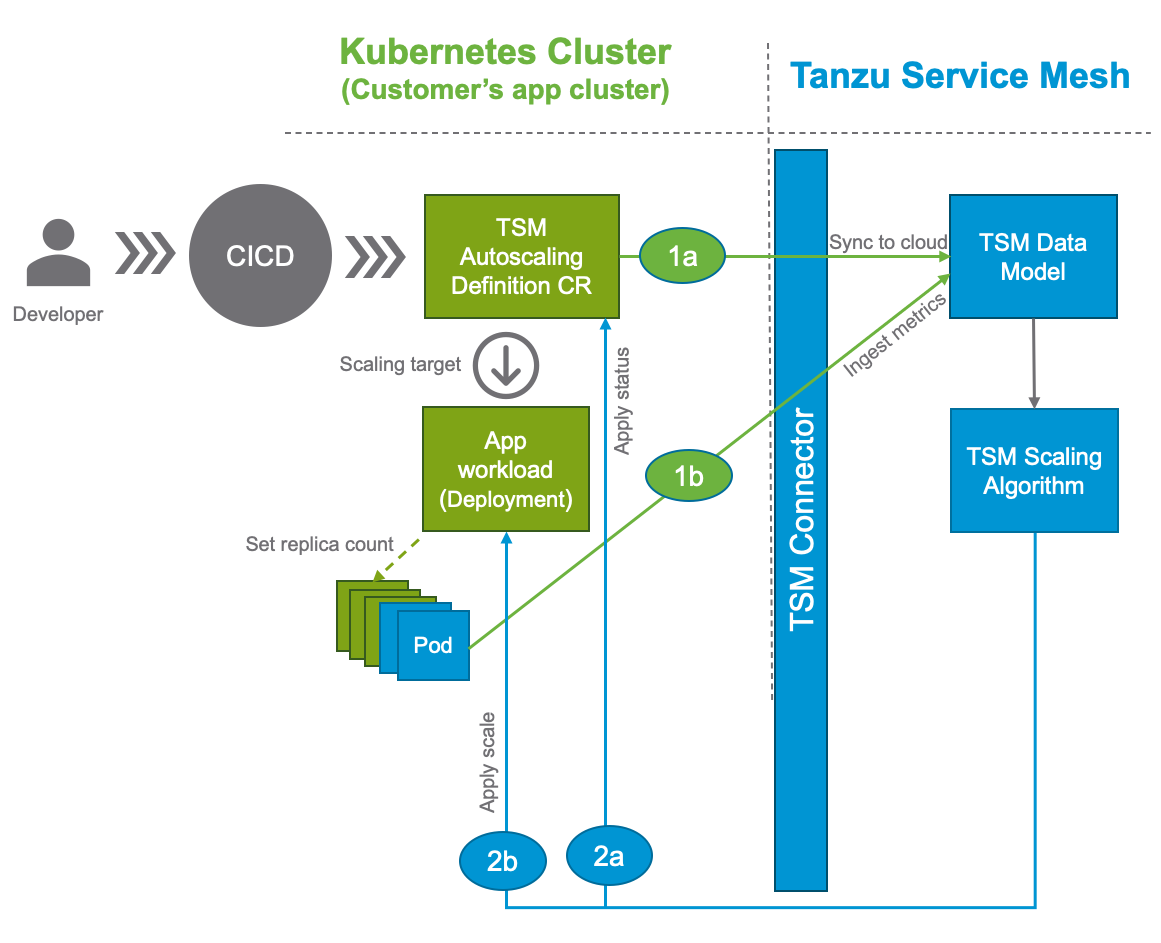

You can configure the Tanzu Service Mesh Service Autoscaler using a Definition custom resource. The Definition custom resource can be part of an automated continuous integration and continuous delivery (CI/CD) process, or a developer can edit it directly.

Tanzu Service Mesh Service Autoscaler Control Flow Diagram (CRD)

When you change the Definition custom resource, the change is saved to the Tanzu Service Mesh Data Model (1a) through the Tanzu Service Mesh Connector, which connects Tanzu Service Mesh to Kubernetes.

The Tanzu Service Mesh Data Model retrieves metrics from pods (1b), and an autoscaling decision is made based on those metrics. Example metrics include latencies, error rates, CPU usage, and memory usage.
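To make the idea of a metric-driven decision concrete, the following is a minimal sketch in Python. It assumes a simple threshold rule with one-instance steps; the actual Tanzu Service Mesh scaling algorithm is not described here, and `ScaleRule`, `desired_replicas`, and all field names are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    """Hypothetical threshold rule; not the real Definition schema."""
    scale_up_above: float    # metric value above which one instance is added
    scale_down_below: float  # metric value below which one instance is removed
    min_instances: int
    max_instances: int


def desired_replicas(current: int, metric_value: float, rule: ScaleRule) -> int:
    """Return the instance count suggested by a single metric sample."""
    if metric_value > rule.scale_up_above:
        target = current + 1
    elif metric_value < rule.scale_down_below:
        target = current - 1
    else:
        target = current
    # Keep the result within the configured bounds.
    return max(rule.min_instances, min(rule.max_instances, target))


# Example: CPU usage in millicores (an assumed unit) sampled from the pods.
rule = ScaleRule(scale_up_above=500, scale_down_below=200,
                 min_instances=1, max_instances=5)
print(desired_replicas(3, 650.0, rule))  # 4
```

A value between the two thresholds leaves the instance count unchanged, which keeps small fluctuations in the metric from causing repeated scaling.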

The Tanzu Service Mesh scaling algorithm takes information from the Tanzu Service Mesh Data Model and writes the current status back to the Definition custom resource (2a). It also applies the desired scaling to the workload controller (for example, a Deployment, StatefulSet, or ReplicaSet) (2b).

The workload controller in turn controls the number of pods running.

In this way, the Definition custom resource indirectly affects the scaling target of the workload controller.
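Steps 2a and 2b can be pictured with a small in-memory sketch in Python. Nothing here calls Kubernetes or Tanzu Service Mesh. `Definition`, `Workload`, `apply_scaling`, and the status keys are hypothetical stand-ins, chosen only to show that the status is written to the custom resource while the instance count is set on the workload controller.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Workload:
    """Stand-in for a workload controller such as a Deployment."""
    kind: str
    name: str
    replicas: int


@dataclass
class Definition:
    """Stand-in for the Definition custom resource (fields are assumptions)."""
    target_name: str
    status: Dict[str, int] = field(default_factory=dict)


def apply_scaling(definition: Definition, workload: Workload, desired: int) -> None:
    # (2a) Record the current status on the Definition custom resource.
    definition.status = {
        "currentInstances": workload.replicas,
        "desiredInstances": desired,
    }
    # (2b) Apply the desired scaling to the workload controller,
    # which in turn controls the number of running pods.
    workload.replicas = desired


deployment = Workload(kind="Deployment", name="catalog", replicas=2)
definition = Definition(target_name="catalog")
apply_scaling(definition, deployment, desired=3)
print(definition.status)    # {'currentInstances': 2, 'desiredInstances': 3}
print(deployment.replicas)  # 3
```

The sketch never sets a replica count on the Definition itself, which mirrors the indirect relationship described above.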

Tanzu Service Mesh Service Autoscaler Control Flow Diagram (GNS-Scoped)

You can configure the Tanzu Service Mesh Service Autoscaler through the UI or the API.

The GNS-scoped autoscaling policy is saved into the Tanzu Service Mesh Data Model (2) through the Tanzu Service Mesh API. The Tanzu Service Mesh Data Model retrieves metrics from pods (1), and an autoscaling decision is made based on those metrics. Example metrics include latencies, error rates, CPU usage, and memory usage.

The Tanzu Service Mesh scaling algorithm takes information from the Tanzu Service Mesh Data Model and applies the desired scaling to the workload controller (for example, a Deployment, StatefulSet, or ReplicaSet) (3). The scaling algorithm does this in each cluster that is part of the GNS.

The workload controller in turn controls the number of pods running.
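The per-cluster behavior of the GNS-scoped flow can be sketched in Python as a loop over the clusters in the GNS. This is an illustration under assumptions: `scale_gns`, the cluster names, and the `decide` callback are hypothetical, and the sketch does not claim whether the real algorithm computes one decision for the whole GNS or one per cluster.

```python
from typing import Callable, Dict


def scale_gns(clusters: Dict[str, int],
              decide: Callable[[int], int]) -> Dict[str, int]:
    """Apply a scaling decision to the workload in every cluster of a GNS.

    clusters maps a cluster name to the workload's current instance count.
    decide is a hypothetical callback that returns the desired count.
    """
    return {name: decide(replicas) for name, replicas in clusters.items()}


# Example: add one instance per cluster, capped at five instances.
current = {"cluster-a": 2, "cluster-b": 3}
print(scale_gns(current, lambda n: min(n + 1, 5)))
# {'cluster-a': 3, 'cluster-b': 4}
```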